We hoped that offering a scenario would make this a realistic representation of the research process. In addition to asking questions about the sample guides, the questionnaires were used to gather basic demographic information, as well as information about students’ prior experience with the library’s research guides and their typical research habits. The questionnaire was piloted with two library student employees to test the wording of the questions.

Our twenty research participants were from the HCI course “User Interface Design and Development” at Ithaca College. They were required to participate in the study as part of a course assignment. We obtained IRB approval from Ithaca College to conduct the usability study. More demographic detail is provided in the Results section below.

The testing took place in a private room in the library. The students were randomly assigned to either the subject guide or the course guide testing group. The guides were shown to the participant on an iMac computer in the Firefox browser at a 1280 × 720 window size. The on-screen activity and the audio of the interview were recorded using Camtasia. Students were informed that they were being recorded and that these recordings would be viewed by their classmates, but they were assured that no one beyond the class and the research team would be able to view them.

Two team members were present at each interview: one set up the workstation and conducted the interview while the other took notes. At the start of each usability interview, one web team member read a script explaining the purpose of the study (see Appendix A). Students were encouraged to think aloud and ask questions during the interviews. Each interview took 20–35 minutes.

During the two weeks after the interviews were completed, each student was required to view four of the recorded sessions. Students who had served as participants in the course guide group viewed only other course guide sessions, while those in the subject group viewed only subject guide sessions.
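The assignment scheme described above (random allocation to a subject or course group, then four recorded sessions drawn only from other members of the participant's own group) can be sketched in Python. This is a minimal illustration under stated assumptions, not the procedure the web team actually used: the paper does not say how randomization or session selection was done, so the shuffle-and-alternate split, the uniform random choice of sessions, and the participant IDs are all hypothetical.

```python
import random


def split_groups(participants, seed=None):
    """Randomly assign participants to the subject or course guide group.

    Assumption: shuffle, then alternate, so group sizes differ by at most one.
    """
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)
    return {"subject": shuffled[0::2], "course": shuffled[1::2]}


def assign_viewings(groups, per_student=4, seed=None):
    """Give each participant `per_student` recorded sessions to review.

    Sessions come only from *other* members of the participant's own group.
    Assumption: sessions are drawn uniformly at random; this sketch does not
    balance how many times each recording is viewed.
    """
    rng = random.Random(seed)
    assignments = {}
    for group_name, members in groups.items():
        for student in members:
            others = [m for m in members if m != student]  # exclude own session
            if len(others) < per_student:
                raise ValueError(
                    f"group {group_name!r} has too few other sessions "
                    f"for {per_student} viewings"
                )
            assignments[student] = rng.sample(others, per_student)
    return assignments


if __name__ == "__main__":
    # Hypothetical IDs for twenty participants.
    participants = [f"P{i:02d}" for i in range(1, 21)]
    groups = split_groups(participants, seed=2012)
    for student, sessions in sorted(assign_viewings(groups, seed=2012).items()):
        print(student, "->", ", ".join(sessions))
```

With twenty participants, each group has ten members, so every student has nine candidate sessions from which four are drawn. A real schedule would probably also aim for each recording to be viewed a similar number of times, which this sketch does not enforce.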

As the final phase of the study, members of the web team were invited by the professor to attend four 50-minute class sessions. During the first two sessions, each student presented an analysis of the research guides based on the recorded usability sessions that she or he had reviewed. On the third day, members of the web team met with the students in small groups to discuss possible design changes to the guides and to develop a list of best practices. During the final class period, the class came together to combine their design changes and best practices. The web team then summarized their findings for the class and asked for feedback about the interview procedures used in the usability test.

Results

What the Usability Interviews Told Us

Demographics

The student participants (n=20) represented more than ten different majors and six minors. Fifteen majored or minored in computer science. There were 13 men and seven women. The majority of the students were upperclassmen: 18 were juniors or seniors. Just under half (n=9) of the students had been in a class with a librarian before, but only seven had visited the library’s research help desk (there was no correlation between visiting the help desk and having had library instruction). A little more than half (n=12) of the students stated that they knew there was a subject librarian for their major.

Students’ Research Process

When the students were asked to describe their approach to research, 11 students mentioned using Google as a first step, and 15 mentioned library resources such as databases, journal articles, and books. The latter number might have been inflated because the students were talking to librarians. When asked if they had used a subject or course guide before, only five participants answered yes. This number may not be generalizable to the campus as a whole, given that nearly one third of the students (n=6) were computer science majors. This department does not often request library instruction. Those who had used guides discovered them through various methods, including library instruction, recommendation by a professor, or the library website.

The answers to the open-ended questions about the research process varied, as participants interpreted the questions differently. The only general trend was that students expected to find library-related resources (e.g., books, journals, and databases) on the subject and course guides. The students found databases to be the most useful tools on both types of guide.

Comparisons Between Guides

The last section of the questionnaire asked the students to compare the guides side by side (within either the subject or course group) in terms of the use of images, multimedia, internal navigation, length of guide, and resource descriptions. Again, there was no clear signal in these results: the students were split on what they liked and did not like.

Images & Video

This area of the study was noteworthy for the sharp division of opinions among students. Some students (n=13) appreciated images (“It makes me feel comfortable, like I’m in the right place”), while others (n=7) considered them wasted space (“I don’t think it adds much”). Images that served to aid navigation (e.g., biology guide, right side) were generally approved of, but purely decorative images (e.g., biology guide, top) were sometimes questioned.

Video had a similarly mixed reception. A video showing how to use the microfilm machine garnered some praise, but a mislabeled video from YouTube caused considerable confusion. Several students stated categorically that they would not click on videos (Hintz et al., 2010, found similar results).

Icons indicating database features were not popular. They were regarded as either confusing (“I know what ‘GET IT’ means when it’s next to an article, but I don’t understand why it’s here”) or just unnecessary (regarding the lock icon indicating that authentication is needed: “I have to log into everything anyway”).

Internal Navigation & Organization

Just over half of the students (n=12) liked having a table of contents (TOC). One student noted that a TOC “really helps to break down the page so I don’t have to scroll through.” Regarding organization, students appreciated that the guides were organized, but they noted the wide variation and inconsistency among the schemes used in different guides.

Length

We asked about students’ preferences regarding the length of guides and the length of resource annotations. In some cases, a single student expressed different views depending on the context in which the question was asked.

Regarding the length of guides, many students (n=10) preferred shorter guides, whereas some (n=7) preferred longer guides. When presented with very long guides, students sometimes felt “overwhelmed” (this word came up frequently), but they also felt greater confidence in the thoroughness of the guide (“I don’t feel like I’d have to go elsewhere”). Interestingly, half of the students (n=10) pointed out that length is not an issue as long as there is good navigation and organization.

Similarly, many students (n=13) said they preferred minimal annotations, but when they encountered longer annotations that included search tips and similar guidance, they tended to react positively. One student suggested that the length of a description might depend on the resource: “If a paragraph is necessary, okay, but for common knowledge like the New York Times, don’t bother.”

What the Classroom Discussion Told Us

The four class sessions in which students presented their analyses and recommendations proved very helpful. Below are some themes that emerged from the discussions.

Consistency of Layout

Students attached more importance to consistency of layout than we had expected. They repeatedly stressed the need for at least some commonality of experience in going from one guide to the next. Specific areas where students felt greater consistency would be helpful included:

• Navigational elements: TOC, back-to-top link.

• Contact information in a consistent location.

• Common supplemental information: e.g., citation styles, plagiarism tutorial.

• Common search boxes: catalog, article quick search (they liked the ability to perform a search right from the page itself).

• Overall format: guides should avoid the “all items by type” pluslet.

Organization

The most common criticism of the guides was that they were poorly organized, or at least that the organizational scheme was neither apparent to the user nor consistent with other guides of the same type.

In the case of the subject guides, one guide that we studied was organized by resource type (handbook, almanac, encyclopedia, etc.), while the other was organized topically. The students noted this inconsistency, with many of them favoring the topical scheme. This preference has been noted previously in the literature (Sinkinson et al., 2012; Sonsteby & DeJonghe, 2013).

Another organizational issue concerned the role of the narrower (right-hand) column. Students could not detect any pattern for why some things were in the left (main) column and others were in the right. Several students found themselves ignoring the right column. This is consistent with studies showing that people read a screen in an F-shaped pattern (Nielsen, 2006). The students suggested that the right column be used primarily for supplemental information.

Students appreciated strong visual divisions between organizational units (i.e., smaller pluslets rather than single long ones). On the other hand, students did not like a large number of pluslets that each contained only a link or two.

Hierarchy

Students felt the most important content should be at the top. By “most important,” they usually meant databases. They appreciated the short “principal databases” boxes at the tops of some guides (e.g., anthropology). One suggestion was to list three top databases and have a “more” link that would reveal additional databases.

Internal Navigation

Navigation within the page was very important to the students. They appreciated TOCs, but suggested some ways that they could be improved:

• TOCs should be visually set off from other pluslets.

• TOCs should be consistent across guides.

• For complex pages, TOCs could appear as a collapsible, Windows Explorer-style tree.

Discussion

Limitations

Working with students in a course that focused on interface design and usability testing provided valuable feedback, but it also produced a very unrepresentative sample. For example, 30% of the participants majored in Computer Science and 75% majored or minored in it, even though Computer Science is a very small department at Ithaca College. Also, 90% of the students were upperclassmen. Freshmen and sophomores may interact with our research guides differently.

We discovered during the usability testing and analysis that we should have piloted the questionnaire with more students. Piloting it with only two students who worked in the library did not uncover problems with many of our questions. For example, we later learned that using a search scenario for each subject or course guide (e.g., “muckrakers” was the topic for the journalism course guide; “polygamy” for the anthropology guide) was not helpful. It was stressful for students, as they often felt limited by their knowledge of that particular topic. Some students scrolled through the guides or used the browser’s “find on page” feature to look for the specific topic word. More thorough pre-testing of the questions could have avoided this problem.

Libraries & Computer Science

The ubiquity of smartphones with their touch screen interfaces has led to a renewed focus on interface design within the fields of computer science and computer science education. While once primarily the focus of web developers, interface design is now a major component of software development. This has led to an increased number of computer science departments offering courses in HCI and integrating HCI into more general courses.

One of the specific skills covered in an HCI course is usability testing. Usability testing involves setting up a testing location with the software to be tested and bringing in people to use that software. The participants are given a basic introduction and asked to perform various tasks. The best way to give students an opportunity to learn about usability tests is to have them take part in the process. For this reason, computer science classes often bring in people from outside to act as clients for the students.

Given the increasing number of HCI classes and the desirability of real-world clients for the students in these classes, collaborations between the library and computer science departments should be possible at many institutions.

This study benefited both the web team and the HCI class. By taking part in the usability tests described in this paper, students learned many of the skills needed to run their own tests, which they were required to do later in the semester. They gained a better understanding of the awkwardness felt by participants and of the importance of the testing environment. The transition to analyzing the data showed them the differences among what they felt during the tests, what they said in response to questions, and what the testers observed. By working with the library’s web team, the students were able to get a better grasp of how usability tests happen in the real world, rather than relying on an academic description of the best-case scenario or a toy example in class.

Running the usability tests described here was time-consuming but otherwise relatively inexpensive. The only software purchased was Camtasia for Mac. Screen capture is not strictly essential, though it can prove useful for later review of material. This paper describes only one way to run such tests, and our findings reflect what we learned about the best way for Ithaca College to develop research guides. Any study undertaken to improve research guides is likely to prove beneficial.

Changes Resulting From the Study

Following this study, the web team created simple guidelines that all research guide authors must follow (see Appendix B). These guidelines were greatly influenced by the classroom discussions with the students.

The web team decided that a few pluslet types should

be included in every guide, with a fixed location for each:

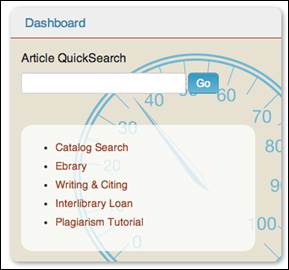

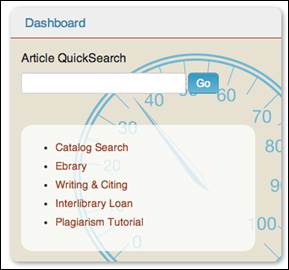

• “dashboard” (see below)

• contact information

• table of contents

• best bets

The “dashboard” is a newly designed pluslet that contains the following elements:

• “article quick search” search box

• link to the catalog

• link to ebrary

• link to citation information page

• link to interlibrary loan

• link to the plagiarism tutorial

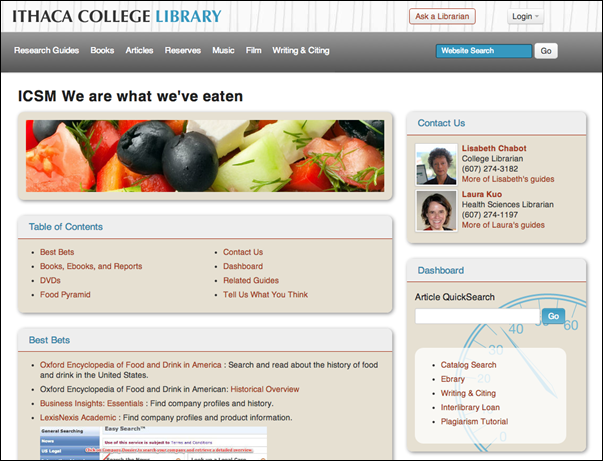

Figure 2

Dashboard pluslet.

The team felt that these elements should be on every guide; many guides already included them, but in different locations and contexts. Placing features in a recognizable configuration and in the same place on every guide makes these important services easier for students to discover (Roth, Tuch, Mekler, Bargas-Avila, & Opwis, 2013). A consistent background image was used for this pluslet to make it stand out. Librarians can add this pluslet to a guide with a simple drag-and-drop in the SubjectsPlus back end.

The contact information pluslet includes the subject librarian’s email, title, phone number, and a link to more guides created by that author. Prior to the usability study, librarians could place this information anywhere on the guide. The students informed us that it should be placed prominently and in the same location on all guides.

The TOC pluslet auto-populates with internal links to all other pluslets on a guide. Because students found the TOC highly desirable, the team wanted to make it a consistent element that is easy for librarians to implement.
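A minimal sketch of what auto-populating a TOC involves, in Python. SubjectsPlus itself is written in PHP and its actual implementation is not described in this paper, so the `Pluslet` record, the `kind` labels, and the `pluslet-<id>` anchor scheme below are hypothetical. The point is only that the TOC is derived from the pluslets already on the page, so librarians never have to maintain it by hand.

```python
from dataclasses import dataclass
from html import escape


@dataclass
class Pluslet:
    pluslet_id: int
    title: str
    kind: str = "basic"  # hypothetical label, e.g. "toc", "basic", "dashboard"


def build_toc(pluslets):
    """Return an HTML list of in-page links to every non-TOC pluslet, in page order."""
    items = []
    for p in pluslets:
        if p.kind == "toc":
            continue  # a TOC should not list itself
        anchor = f"pluslet-{p.pluslet_id}"  # hypothetical anchor naming scheme
        items.append(f'  <li><a href="#{anchor}">{escape(p.title)}</a></li>')
    return "\n".join(["<ul>", *items, "</ul>"])


guide = [
    Pluslet(1, "Table of Contents", kind="toc"),
    Pluslet(2, "Best Bets"),
    Pluslet(3, "Books & E-books"),
]
print(build_toc(guide))
```

This prints a two-item list linking to `#pluslet-2` and `#pluslet-3`, with the ampersand in the second title escaped as `&amp;`. Because the list is regenerated from whatever pluslets are currently on the guide, adding, removing, or renaming a box updates the TOC automatically.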

Students consistently told us that they found databases to be the most useful resources on the research guides (similar results were observed by Ouellette, 2011; Sonsteby & DeJonghe, 2013; Staley, 2007). They also liked the set of “principal databases” at the top of the anthropology guide. As a result, each guide is required to include a “best bets” pluslet just below the TOC. This pluslet should contain links to a few of the most important databases. For very short guides, this feature is optional.

Regarding organization, the SubjectsPlus administrator disabled the “all items by type” pluslet. As a result, librarians will have to determine their own organizational scheme for each guide, based on the needs of the particular class or discipline. This should limit the use of a type-based organizational scheme, which students did not find helpful.

The web team instituted a rule that primary content should be in the left (larger) column, with the right (smaller) column reserved for supplementary information. Of course, librarians’ opinions as to what is primary versus supplemental may vary, so examples were provided in the guidelines. Relegating less important material to the right-hand column also makes sense for a responsive site, since the right column drops below the left when viewed at a narrow screen width, for instance on a smartphone.

Because students were divided in their opinions of images, images were neither required nor discouraged in the guides. The guidelines do specify a recommended aspect ratio for images used at the top of a guide. This allows librarians the creative freedom to use images if they feel it is appropriate, while encouraging a standard practice that produces visual consistency across guides.

Visit http://ithacalibrary.net/research/lkuo/2013/ to view images of the research guides evaluated in the study and their revisions using the new guidelines.

Conclusion

The research guide usability testing and classroom discussions were successful in that they helped the web team generate simple guidelines for all librarians at Ithaca College to follow. Working with the HCI course provided invaluable insight into both design and organizational issues. We hope that other libraries will consider some of these suggested practices.

Student responses during the usability testing were highly varied. The classroom focus groups helped clarify and underscore what the participants were actually trying to say. With the usability study, we were able to observe the students interacting with our guides, while the discussion allowed for an in-depth conversation about students’ preferences. We recommend the combination of a usability study and group discussion.

A study of this nature is very time-consuming, but the effort is justified by the work that librarians devote to the construction and maintenance of research guides. Linking this study to work with members of the Computer Science Department also proved valuable, as it provided feedback from an outside source. It also gave us additional knowledge of how to run such studies, which should benefit future usability testing. Collaboration with academic departments is a great marketing opportunity for a library, since it gives librarians opportunities to interact with students and faculty members and shows that librarians are actively working to better meet their needs.

Perhaps the most interesting finding from this study is that students value consistency across guides. Doing research is hard work for novices and experts alike. Providing research guides with a consistent layout simplifies the initial steps. However, students have diverse preferences and personalities, so studies like this one are unlikely to reveal a single path to successful research that works for all students. Therefore, guides should be designed with these varying needs and skills in mind (Sinkinson et al., 2012). The content of the guides depends on the discipline and should be left to the expertise of the subject librarian.

We hope that students at Ithaca College will benefit from the redesigned research guides. We will continue to test the implemented changes with students to ensure the guides’ usefulness.

References

Courtois, M. P., Higgins, M. E., & Kapur, A. (2005). Was this guide helpful? Users’ perceptions of subject guides. Reference Services Review, 33(2), 188–196. doi: 10.1108/00907320510597381

Ghaphery, J., & White, E. (2012). Library use of Web-based research guides. Information Technology & Libraries, 31(1), 21–31.

Gonzalez, A. C., & Westbrock, T. (2010). Reaching out with LibGuides: Establishing a working set of best practices. Journal of Library Administration, 50(5/6), 638–656. doi: 10.1080/01930826.2010.488941

Hintz, K., Farrar, P., Eshghi, S., Sobol, B., Naslund, J., Lee, T., Stephens, T., & McCauley, A. (2010). Letting students take the lead: A user-centred approach to evaluating subject guides. Evidence Based Library & Information Practice, 5(4), 39–52.

Jackson, R., & Pellack, L. J. (2004). Internet subject guides in academic libraries: An analysis of contents, practices, and opinions. Reference & User Services Quarterly, 43(4), 319–327.

Little, J. J., Fallon, M., Dauenhauer, J., Balzano, B., & Halquist, D. (2010). Interdisciplinary collaboration: A faculty learning community creates a comprehensive LibGuide. Reference Services Review, 38(3), 431–444. doi: 10.1108/00907321011070919

McMullin, R., & Hutton, J. (2010). Web subject guides: Virtual connections across the university community. Journal of Library Administration, 50(7–8), 789–797. doi: 10.1080/01930826.2010.488972

Nielsen, J. (2006). F-shaped pattern for reading Web content. Retrieved 6 Nov. 2013 from http://www.nngroup.com/articles/f-shaped-pattern-reading-web-content/

Ouellette, D. (2011). Subject guides in academic libraries: A user-centred study of uses and perceptions. Canadian Journal of Information & Library Sciences, 35(4), 436–451.

Reeb, B., & Gibbons, S. (2004). Students, librarians, and subject guides: Improving a poor rate of return. portal: Libraries and the Academy, 4(1), 123–130. doi: 10.1353/pla.2004.0020

Roth, S. P., Tuch, A. N., Mekler, E. D., Bargas-Avila, J. A., & Opwis, K. (2013). Location matters, especially for non-salient features–An eye-tracking study on the effects of web object placement on different types of websites. International Journal of Human-Computer Studies, 71(3), 228–235. doi: 10.1016/j.ijhcs.2012.09.001

Santos, B. S., Dias, P., Silva, S., Ferreira, C., & Madeira, J. (2011). Integrating user studies into computer graphics-related courses. IEEE Computer Graphics and Applications, 31(5), 14–17. doi: 10.1109/MCG.2011.78

Sinkinson, C., Alexander, S., Hicks, A., & Kahn, M. (2012). Guiding design: Exposing librarian and student mental models of research guides. portal: Libraries and the Academy, 12(1), 63–84. doi: 10.1353/pla.2012.0008

Sonsteby, A., & DeJonghe, J. (2013). Usability testing, user-centered design, and LibGuides subject guides: A case study. Journal of Web Librarianship, 7(1), 83–94. doi: 10.1080/19322909.2013.747366

Staley, S. M. (2007). Academic subject guides: A case study of use at San José State University. College & Research Libraries, 68(2), 119–139.

Strutin, M. (2008). Making research guides more useful and more well used. Issues in Science and Technology Librarianship, Fall(55). Retrieved 7 Nov. 2013 from http://www.istl.org/08-fall/article5.html

Vileno, L. (2007). From paper to electronic, the evolution of pathfinders: A review of the literature. Reference Services Review, 35(3), 434–451. doi: 10.1108/00907320710774300

Appendix A

Questionnaires

Usability Testing Fall 2012 - Subject Guides

Thank you for participating in our usability study of the Library’s Course and Subject Guides. Our purpose is not to test you personally but to uncover problems all our users face when conducting course-related research. So try not to feel self-conscious about any difficulties you run into, since these are exactly what we’re trying to identify. If at any point you are not sure what we are asking, please let us know so that we can clarify our question. We value your honest opinion tremendously and believe that student feedback is what’s needed to help us improve our research guides. Don’t be shy! We really want to know what you think of our guides.

0. What is your major/minor?

1. What is your year of study?

2. Have you had a class with a librarian before at Ithaca College?

3. Have you been to the research help desk for assistance?

4. Did you know that there is a subject librarian for your major?

5. What is your research strategy for beginning a term paper? (You have to write a paper on Fracking. How would you start?)

Subject librarians at IC Library construct guides to particular subject areas to help students who are writing papers in those areas. [Bring up a random subject guide to demonstrate.]

6. Have you used a library subject guide before? [If no, skip to *] When did you use it? (early in the research process or later?)

6a. Which guides have you used before?

6b. Are there guides that you have used repeatedly?

6c. Can you show me the guide(s)?

6d. How did you find out about the guide?

6e. Have you ever searched for a guide that wasn’t first shown to you? (e.g., if you’d used a music guide, and were assigned a psychology paper, did you look for a psychology guide?) [If no, skip to *]

6f. Did you find the guide useful? What did you find useful about it?

*Anthropology Guide

7a. What would you expect to find on a subject guide for anthropology?

[Bring up Anthropology guide]

You have to write a research paper on polygamy. Please take your time to look over this guide.

7b. How might you use this guide as part of your research process?

7c. What are the three most useful tools for you on this guide?

Biology Guide

8a. What would you expect to find on a subject guide for biology?

[Bring up Biology guide]

You have to write a research paper on RNA. Please take a moment to look over this guide.

8b. How might you use this guide as part of your research process?

8c. What are the three most useful tools on this guide?

Comparison of Guides

[Show anthropology and biology guides in the same browser in different tabs]

Which guides do you prefer in terms of:

9a. use of images and/or multimedia

9b. internal navigation (TOC)/organization

9c. length of guide

9d. resource descriptions

10. content: do you feel the resources you need to do research are there?

Navigation

11. Please find a guide on psychology.

Usability Testing Fall 2012 - Course Guides

Thank you for participating in our usability study of the Library’s Course and Subject Guides. Our purpose is not to test you personally but to uncover problems all our users face when conducting course-related research. So try not to feel self-conscious about any difficulties you run into, since these are exactly what we’re trying to identify. If at any point you are not sure what we are asking, please let us know so that we can clarify our question. We value your honest opinion tremendously and believe that student feedback is what’s needed to help us improve our research guides. Don’t be shy! We really want to know what you think of our guides.

0. What is your major and minor?

1. What is your year of study?

2. Have you had a class with a librarian before at Ithaca College?

3. Have you been to the research help desk for assistance?

4. Did you know that there is a subject librarian for your major?

5. What is your research strategy for beginning a term paper? (You have to write a paper on Fracking. How would you start?)

Subject librarians at IC Library often construct guides for particular classes that highlight resources that students may find useful. [Bring up a random course guide to demonstrate.]

6. Have you used a library course guide before? [If no, skip to *] When did you use it? (early in the research process or later?)

6a. If yes, which guides have you used before?

6b. Are there guides that you have used repeatedly?

6c. Can you show me the guide(s)?

6d. How did you find out about the guide?

6e. Have you ever searched for a guide that wasn’t first shown to you? (e.g., if you’d used a sociology course guide, and were assigned a psychology paper, did you look for a psychology course guide?) [If no, skip to *]

6f. Did you find the guide useful? What did you find useful about it?

*We Are What We’ve Eaten

You have to write a research paper on the banana trade in Central America. Please take a moment to look over this guide.

[Bring up “We Are What We’ve Eaten” guide.]

7a. How might you use this guide as part of your research process?

7b. What are the three most useful tools on this guide?

Journalism History

You have to write a research paper on early-20th-century American “muckrakers.” Please take a moment to look over this guide.

[Bring up Journalism History guide]

8a. How might you use this guide as part of your research process?

8b. What are the three most useful tools on this guide?

Blues

You have to write a research paper on the influence of African American sacred music on the blues. Please take a moment to look over this guide.

[Bring up the Blues guide]

9a. How might you use this guide as part of your research process?

9b. What are the three most useful tools on this guide?

Comparison of Guides

[Show the three guides side by side in the same browser in different tabs]

Which guides do you prefer in terms of:

10a. use of images and/or multimedia

10b. internal navigation (TOC)/organization

10c. length of guide

10d. resource descriptions

11. content: do you feel the resources you need to do research are there?

Navigation

12. Please find a psychology course guide.

Appendix B

Recommendations/Guidelines for Subject and Course Guides

- “Contact Us” pluslet in the upper right corner.
- Table of contents is the first non-image pluslet. Use the TOC pluslet; don’t make your own. Optional if guide is less than 900px tall.
- There should be a “Best Bets” area near the top of the guide (but below the TOC). This should contain links to major resources and/or custom search boxes. Optional for very short guides.
- Revise all guides to not use the “All Items by Source” pluslet.
- Left column should contain primary content.
- Right column should contain supplemental content including, but not limited to:
  - Dashboard (directly under subject specialist)
  - Custom Content may include related guides, selected journals/RSS, Associations, Help documents.
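Because the rules above are concrete, they could be expressed as an automated check. The Python (3.9+) sketch below is illustrative only and is not a tool described in this paper: it models a guide as two hypothetical lists of pluslet labels (left and right column, top to bottom) plus a page height, assumes the TOC and Best Bets live in the left column, and assumes that “very short” for Best Bets means the same 900px threshold given for the TOC.

```python
from dataclasses import dataclass

SHORT_GUIDE_PX = 900  # Appendix B: TOC optional if the guide is under 900px tall


@dataclass
class GuideLayout:
    left: list[str]   # hypothetical pluslet labels, top to bottom
    right: list[str]
    height_px: int


def check_guide(g: GuideLayout) -> list[str]:
    """Return a list of guideline violations (an empty list means compliant)."""
    problems = []
    short = g.height_px < SHORT_GUIDE_PX

    # Contact pluslet in the upper right corner, Dashboard directly beneath it.
    if not g.right or g.right[0] != "contact":
        problems.append("Contact Us pluslet must be at the top of the right column")
    if len(g.right) < 2 or g.right[1] != "dashboard":
        problems.append("Dashboard must sit directly under the Contact Us pluslet")

    # TOC must be the first non-image pluslet (optional on short guides).
    non_image = [kind for kind in g.left if kind != "image"]
    if "toc" in non_image:
        if non_image[0] != "toc":
            problems.append("TOC must be the first non-image pluslet")
    elif not short:
        problems.append("TOC is required on guides 900px or taller")

    # Best Bets near the top but below the TOC (assumed optional on short guides).
    if "best_bets" in g.left:
        if "toc" in g.left and g.left.index("best_bets") < g.left.index("toc"):
            problems.append("Best Bets must appear below the TOC")
    elif not short:
        problems.append("Best Bets pluslet is missing")

    # The "All Items by Source" pluslet is no longer allowed anywhere.
    if "all_items_by_source" in g.left + g.right:
        problems.append('Remove the "All Items by Source" pluslet')

    return problems


compliant = GuideLayout(
    left=["image", "toc", "best_bets", "books", "articles"],
    right=["contact", "dashboard", "related_guides"],
    height_px=2400,
)
print(check_guide(compliant))  # → []
```

A check like this could flag drift from the guidelines as guides are edited over time. It cannot judge whether a given box is truly primary or supplemental content; the guidelines leave that decision to librarians.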

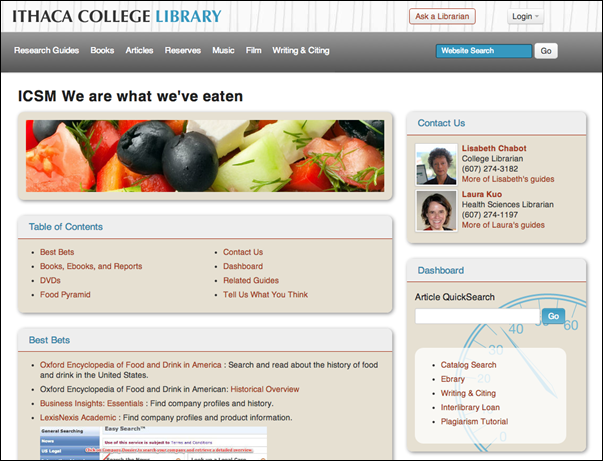

Figure 3

Post-revision version of the “We Are What We’ve Eaten” guide, showing implementation of the new guidelines.

2013 Cobus-Kuo, Gilmour, and Dickson.

This is an Open Access article distributed under the terms of the Creative Commons Attribution-Noncommercial-Share Alike License 2.5 Canada (http://creativecommons.org/licenses/by-nc-sa/2.5/ca/), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly attributed, not used for commercial purposes, and, if transformed, the resulting work is redistributed under the same or similar license to this one.
