Research Article

Systematic Review Research Guides and Support Services in Academic Libraries in the US: A Content Analysis of Resources and Services in 2023

Elizabeth A. Sterner

Assistant Professor, Health Sciences & Science Librarian

University Libraries, Northern Illinois University

DeKalb, Illinois, United States of America

Email: esterner@niu.edu

Received: 15 July 2023 Accepted: 20 Dec. 2023

© 2024 Sterner.

This is an Open Access article distributed under the terms of the Creative Commons‐Attribution‐Noncommercial‐Share Alike License 4.0 International (http://creativecommons.org/licenses/by-nc-sa/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly attributed, not used for commercial purposes, and, if transformed, the resulting work is redistributed under the same or similar license to this one.


DOI: 10.18438/eblip30405

Abstract

Objective – The purpose of this research project was to examine the state of library research guides supporting systematic reviews in the United States, as well as the services offered by the libraries of these academic institutions. This paper highlights the informational background, internal and external educational resources, informational and educational tools, and support services offered throughout the stages of a systematic review.

Methods – The methodology centered on a content analysis of systematic review library research guides available in 2023. An incognito Google search and hand searching were used to identify relevant research guides. Keywords searched included: academic library systematic review research guide.

Results – The analysis of 87 systematic review library research guides published in the United States showed that they vary in the resources and tools shared, the depth of coverage of each stage, and the support services provided. Results showed higher levels of information and informational tools shared compared to internal and external education and educational tools. Findings included high coverage of the introductory, planning, guidelines and reporting standards, conducting searches, and reference management stages. Support services offered fell into three potential categories: consultation and training; acknowledgement; and collaboration and co-authorship. The most referenced systematic review software tools and resources ranged from subscription-based tools (e.g., Covidence and DistillerSR) to open access tools (e.g., Rayyan and abstrackr).

Conclusion – A systematic review library research guide is not the type of research guide that you can create and forget about. Librarians should consider the resources, whether educational or informational, and the depth of coverage when developing or updating systematic review research guides or support services. Maintaining a systematic review research guide and support service requires continual training and ongoing familiarity with all resources and tools linked in the guide.

Introduction

Systematic reviews use explicit methods to combine information from multiple studies while minimizing bias and highlighting the quality of included studies to produce a reliable, reproducible summary that informs decision making, e.g., how effective a certain drug treatment might be (Cochrane, 2020). Tsafnat et al. (2014) identified 15 methodical steps that systematic reviews tend to follow: formulate the review question, find previous systematic reviews, write the protocol, devise the search strategy, search, deduplicate search results, screen abstracts, obtain full text, screen full text, snowball, extract data, synthesize data, re-check literature, meta-analyze, and write up the review. Of these steps, academic librarians can support searching for existing systematic reviews and developing research questions and objective, reproducible search strategies (preparation); finding relevant citations and deduplicating citations (retrieval); and writing portions of the final report, specifically the methodology (write-up). Interest in systematic reviews has been growing since the 1980s (Chalmers & Fox, 2016). Hoffmann et al. (2021) calculated a “20-fold increase in the number of SRs indexed” between 2000 and 2019 (p. 1). With this increase in the number of systematic reviews published per year, it is unsurprising that academic librarians have developed, and continue to develop and maintain, systematic review research guides and support services to meet the needs of researchers interested in publishing systematic reviews.

Literature Review

Several researchers have demonstrated a positive association between including librarians or search specialists on systematic review teams and improved search quality (Koffel, 2015; Li et al., 2014; Meert et al., 2016; Rethlefsen et al., 2015). Authors of many published studies have focused on the roles and support that health science librarians contribute to systematic review teams. Beverley et al. (2003) identified the transitioning role of information professionals in the systematic review process as “quality literature filterers, critical appraisers, educators, disseminators, and even change managers” (p. 65). Rethlefsen et al. (2015) demonstrated significantly better reported search strategies and search documentation in systematic reviews with a librarian or information specialist identified as a co-author. Spencer and Eldredge (2018) identified the central roles librarians play on systematic review teams, e.g., searching, source selection, and teaching, as well as less documented roles, such as supporting “formalized systematic review services” (Spencer & Eldredge, 2018, p. 50). Going forward, the “role of librarian as expert searcher may be evolving into the role of librarian as systematic review automation expert” (Laynor, 2022, p. 104). Early testing of ChatGPT’s effectiveness at creating Boolean queries for systematic reviews has noted its inaccurate generation of Medical Subject Headings (MeSH) terms and “high variability in query effectiveness across multiple requests” (Wang et al., 2023, p. 14). Since much of the research confirms the importance of librarians in systematic review processes, it is no surprise that they create research guides to support their work and the people with whom they work.

In addition to the extensive knowledge required, systematic reviews are also time-consuming with regard to searching. Bullers et al. (2018) calculated that medical librarians spent an average of 26.9 hours per systematic review project (median of 18.5 hours). These researchers determined that librarians spent considerable time on search strategy development, translation, and writing. When surveyed, Canadian university health science librarians identified lack of time and insufficient training as barriers to their ability to support systematic reviews (Murphy & Boden, 2015). As systematic reviews grow more popular in other fields, librarians who serve other disciplines face similar challenges. Toews (2019) investigated the roles of veterinary librarians at universities and colleges and found library policies, insufficient training, and limited time to be barriers to participation in systematic reviews. Lackey et al. (2019) presented a case study of their efforts to develop and launch a for-fee systematic review core in their library and, in the process, became “…fully integrated into the campus research infrastructure” (p. 591). These researchers kept accurate records of time spent working on projects and concluded that the for-fee service increased both demand for systematic review services and their ability to support them. These knowledge and time requirements inform the types of systematic review support services that can or should be offered by a health science librarian.

Academic librarians use research guides to share library resources and services. With a focus on pedagogical research guide design, Stone et al. (2018) determined that organizing resources around the how and why of the research process, in comparison to a pathfinder design, enhanced student learning. Bergstrom-Lynch (2019) developed a working set of best practices for creating research guides that are both user-friendly and learner-friendly and concluded that a more effective, learning-centred instructional research guide could be developed by focusing on measurable learning objectives. Data from Lee et al. (2021) suggested that “library guides on systematic reviews currently serve as information repositories rather than teaching tools” (p. 73).

One question that needs to be asked, however, is which tools, resources, and services are being highlighted. This research provides insight into the current state of research guides, noting the information they include and exclude. Highlights include features that may not seem obvious as well as considerations for what type of service, if any, could be provided by academic librarians in support of systematic review projects. As a result, this content analysis can aid the efforts of librarians developing or updating systematic review research guides and services at their own institutions.

Aims

In this content analysis, I surveyed systematic review library research guides and summarized the tools, resources, and services identified or provided by academic libraries in the United States in support of the systematic review process. The project was born of my own need to develop a systematic review research guide and support service. Initially unsure of how to approach the topic, I developed this project to answer some open questions and minimize potential biases during the research guide and service development phase. The aim of this project was not to critique current systematic review research guides or to make decisions for librarians creating systematic review research guides or developing a systematic review support service, but to highlight the benefits of different considerations illuminated by the findings of this study and by my experience in developing a systematic review research guide and corresponding support service. The following questions guided the survey of systematic review library research guides and services:

RQ1: Which tools and resources are health science librarians sharing in support of systematic reviews?

RQ2: How much, and to what extent, are health science librarians covering the stages of a systematic review?

RQ3: What services are librarians offering in support of systematic reviews?

RQ4: What decisions might librarians need to consider when developing systematic review research guides?

Methods

A content analysis method was selected to complete this study. According to White and Marsh (2006), “content analysis is a highly flexible research method that has been widely used in library and information science (LIS) studies with varying research goals and objectives” (p. 22). The benefits of content analysis include flexibility of research design, support for both qualitative and quantitative analyses, and the fact that it is considered “unobtrusive, unstructured, and context sensitive” (Harwood & Garry, 2003, p. 493). Kim and Kuljis (2010) “…found that applying content analysis to Web-based content is a relatively easy process that allows researchers to perform and prepare data at their convenience and to avoid lengthy ethics approval procedures” (p. 374). This work built on that of Lee et al. (2021), whose methods were informed by Yoon and Schultz (2017). Yoon and Schultz developed a system to analyze academic libraries’ websites regarding research data management services. Lee et al. conducted a content analysis of 18 systematic review library guides from English-speaking institutions throughout the world and found heavily informational systematic review guides with opportunities to improve the instructional and skills-focused content.

Sample

An incognito Google search for (academic library systematic review research guide) was performed on 3 March 2023 and returned approximately 285,000,000 results. Reviewing was considered complete when ten results in a row were out of scope of the eligibility criteria, which occurred at exactly the 200th result. This initial search identified 151 results that were reviewed for inclusion eligibility. A record was kept of the referrals from each systematic review research guide to others. If these referred research guides were not yet listed in the initial results and met the inclusion criteria, they were added to the list. The remaining four results were located by hand searching using this process. Data extraction occurred from 6 March to 22 March 2023. After 22 March 2023, no additional research guides were added to the list, and data collection was considered finalized. Carnegie Classification data (American Council on Education, 2024) were collected during this same period, concluding on 22 March 2023.
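The stopping rule used when reviewing the ranked Google results can be expressed as a small loop. The Python below is a minimal, hypothetical sketch, not the procedure actually run in this study (screening was done by hand); `screen_until_stop` and its `in_scope` callback are invented names, and `in_scope` stands in for the manual eligibility judgment.

```python
from typing import Callable, Iterable, List, Tuple, TypeVar

T = TypeVar("T")


def screen_until_stop(
    results: Iterable[T],
    in_scope: Callable[[T], bool],
    stop_after: int = 10,
) -> Tuple[List[T], int]:
    """Collect in-scope results in ranked order, stopping once `stop_after`
    consecutive results are out of scope.

    Returns the in-scope results and the 1-based rank of the last result
    examined (0 if `results` is empty).
    """
    kept: List[T] = []
    consecutive_misses = 0
    rank = 0
    for rank, item in enumerate(results, start=1):
        if in_scope(item):
            kept.append(item)
            consecutive_misses = 0  # an eligible hit resets the run of misses
        else:
            consecutive_misses += 1
            if consecutive_misses >= stop_after:
                break  # ten (by default) out-of-scope results in a row: stop
    return kept, rank


# Hypothetical usage: True marks an in-scope result.
flags = [True] * 5 + [False] * 3 + [True] + [False] * 10 + [True] * 5
kept, stopped_at = screen_until_stop(flags, in_scope=lambda eligible: eligible)
print(len(kept), stopped_at)  # prints: 6 19
```

With `stop_after=10`, the returned rank is the position of the tenth consecutive out-of-scope result; in this study, that point fell at the 200th result.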

To be eligible for inclusion in this study, research guides had to be produced by academic libraries located within the United States and focus on systematic reviews. If a research guide focused only on narrative literature reviews, not systematic reviews, it was excluded. Only one research guide per university was counted. If multiple were available, the research guide serving the most advanced students (e.g., graduate students) or faculty, with the most in-depth, thorough information, was selected as the sample from that institution. If multiple research guides served different subjects at the same in-depth level, the guide geared toward the health sciences was selected. Only research guides written in English and published by academic institutions in the United States were included.
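The one-guide-per-institution rule amounts to filtering for eligibility and then keeping the highest-priority guide per institution. The sketch below is hypothetical: the `Guide` fields, the numeric `audience_level` and `depth` scales, and the assumption that audience level is compared before depth are illustrative choices, not coding rules stated in the study.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Guide:
    institution: str
    country: str
    language: str
    covers_systematic_reviews: bool
    audience_level: int  # hypothetical scale: 1 = undergraduate, 2 = graduate, 3 = faculty
    depth: int           # hypothetical count of in-depth items on the guide
    health_sciences: bool


def is_eligible(g: Guide) -> bool:
    """US academic library, written in English, focused on systematic reviews."""
    return g.country == "US" and g.language == "en" and g.covers_systematic_reviews


def priority(g: Guide) -> Tuple[int, int, bool]:
    # Assumed order: most advanced audience, then depth, then health sciences as tie-breaker.
    return (g.audience_level, g.depth, g.health_sciences)


def select_one_per_institution(guides: List[Guide]) -> Dict[str, Guide]:
    best: Dict[str, Guide] = {}
    for g in guides:
        if not is_eligible(g):
            continue
        current = best.get(g.institution)
        if current is None or priority(g) > priority(current):
            best[g.institution] = g
    return best


guides = [
    Guide("Univ A", "US", "en", True, 2, 5, False),
    Guide("Univ A", "US", "en", True, 2, 5, True),   # same level and depth, health sciences
    Guide("Univ B", "UK", "en", True, 3, 9, True),   # outside the US: excluded
    Guide("Univ C", "US", "en", True, 1, 9, True),
    Guide("Univ C", "US", "en", True, 3, 2, False),  # faculty audience outranks depth
]
selected = select_one_per_institution(guides)
print(sorted(selected))  # prints: ['Univ A', 'Univ C']
```

Comparing tuples keeps the tie-breaking order explicit; a different reading of the rule (depth before audience) would only require reordering `priority`.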

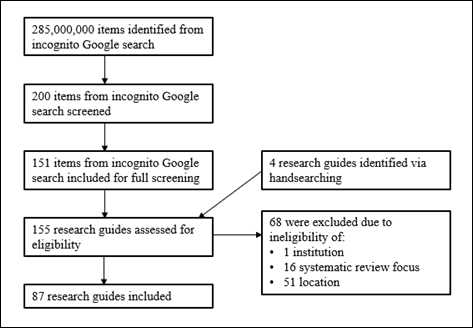

A total of 155 systematic review research guides were reviewed for this study (Figure 1). An Excel table with columns for the introductory, planning phase, guidelines and reporting standards, reference management, screening, data extraction, and critical appraisal stages was used to synthesize the research guides. Of the 155 research guides screened for eligibility, 82 eligible guides were found during the initial search and five more were included through hand searching, for a total of 87 research guides included in this review. Citations were managed in Zotero.

Figure 1

Flow diagram.

Data Collection

The methods used in this review were informed by the work of Lee et al. (2021), whose work was in turn informed by Yoon and Schultz (2017). The content analysis categories developed by Lee et al., namely education (internal), education (external), information, service, tool (educational), and tool (informational), were used as a guide to note the presence or absence of each item, not the number of each item. Each guide was reviewed for the absence or presence of these categories. For example, the presence of informational tools was noted, but not whether there were two or four tools on a given guide. Individual tools, resources, and services were noted in an in-depth review of the stages of a systematic review. The stages of review collected were informed by the work of Tsafnat et al. (2014) and of Lee et al. In-depth data collected were assigned to the stages listed in Table 1. The category labeled “Guidelines and Reporting Standards” also included dissemination of a published work, because publication may require adherence to specific standards. Additional data collected for each guide included any referrals from other research guides, geographic location, date updated, public or private status, Carnegie Classification status, and levels of services provided, if applicable (Table 2).

Table 1

Description of Categories by Stage

| Stage | Description |
|---|---|
| Introductory | Definitions, examples, other types of reviews |
| Planning Phase | Time and team requirements, question development including question frameworks and eligibility criteria, protocol registration |
| Guidelines and Reporting Standards | Examples of either, and documentation of search and results in preparation for dissemination of work |
| Conducting Searches | Developing search strategies, links to databases and grey literature, saving search strategies |
| Reference Management | Examples related to deduplicating search results, finding full-text, management of resources |
| Screening | Software including potential cost |
| Critical Appraisal | Including risk of bias, quality of reporting, tools |
| Data Extraction | Examples and resources |

Table 2

Data Collection Template

| Section | Field |
|---|---|
| General Information (Text Input) | Referred to by (name of institutions) |
| | Link |
| | Name of institution |
| | Location |
| | Last updated |
| | Private/public institution |
| | Carnegie classification |
| Types of Information (Presence or Absence) | Education - internal |
| | Education - external |
| | Information |
| | Service |
| | Tool - educational |
| | Tool - informational |
| Tiers of Service (Text Input) | Consultation and training; acknowledgement; collaboration and co-authorship |
| Specific Resources (e.g., names of tools) Related to the Stages of Systematic Reviews (Text Input) | Introductory |
| | Planning phase |
| | Guidelines and reporting standards |
| | Conducting searches |
| | Reference management |
| | Screening |
| | Critical appraisal |
| | Data extraction |
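For readers who want to reproduce this kind of coding in a script rather than a spreadsheet, one guide's row of the Table 2 template could be held in a record like the hypothetical `GuideRecord` below. The class name, field names, and the `stages_covered` helper are illustrative assumptions; the study itself recorded data in Excel. The six content types are booleans because only presence or absence was coded.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

# Stage names in Table 1 order.
STAGES = (
    "Introductory", "Planning phase", "Guidelines and reporting standards",
    "Conducting searches", "Reference management", "Screening",
    "Critical appraisal", "Data extraction",
)


@dataclass
class GuideRecord:
    # General information (text input)
    institution: str
    link: str
    location: str
    last_updated: Optional[str]  # None when the guide shows no date
    control: str                 # "public" or "private"
    carnegie: Optional[str]      # e.g. "R1"; None when undesignated
    referred_to_by: List[str] = field(default_factory=list)
    # Types of information: presence or absence only, not counts
    education_internal: bool = False
    education_external: bool = False
    information: bool = False
    service: bool = False
    tool_educational: bool = False
    tool_informational: bool = False
    # Tiers of service (text input)
    service_tiers: List[str] = field(default_factory=list)
    # Specific resources named at each stage (text input)
    stage_resources: Dict[str, List[str]] = field(default_factory=dict)

    def stages_covered(self) -> List[str]:
        """Stages, in Table 1 order, with at least one recorded resource."""
        return [s for s in STAGES if self.stage_resources.get(s)]


record = GuideRecord(
    institution="Example University",                      # hypothetical
    link="https://guides.example.edu/systematic-reviews",  # hypothetical
    location="Midwest",
    last_updated="2023",
    control="public",
    carnegie="R1",
    information=True,
    tool_informational=True,
    stage_resources={
        "Screening": ["Rayyan", "Covidence"],
        "Introductory": ["Grant and Booth (2009)"],
        "Data extraction": [],
    },
)
print(record.stages_covered())  # prints: ['Introductory', 'Screening']
```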

Results

A total of 87 research guides met the inclusion criteria and were included in this review. Of the 155 systematic review library research guides appraised in this study, 68 were excluded based on the eligibility criteria (1 not a university, 16 did not cover systematic reviews, 51 located outside of the United States). The 87 research guides included in this study represented locations across the entire United States (Central Plains – 5; Mid Atlantic – 12; Midwest – 17; North Atlantic – 14; Pacific Northwest – 1; Rocky Mountains – 2; South Central – 8; Southeast – 17; West Coast – 11), but leaned heavily toward R1 Carnegie-designated institutions (R1 – 64; R2 – 11; special focus – 5; without designation – 7) and public academic institutions (61; 26 private, nonprofit academic institutions). As a group, these guides were regularly updated, with 55 guides (63.2%) most recently updated in 2023 (21 in 2022; 2 in 2021; 9 without a date). Some research guides also directed users to other systematic review research guides outside of their institutions. The three most referenced research guides were created by Cornell University, the University of Michigan, and the University of North Carolina at Chapel Hill. Of the 87 research guides reviewed, 10 guides (11.5%) covered multiple subject areas. The subject area focus of the research guides included health science and biomedical (68), education (2), engineering (2), social science (8), agriculture (4), business/economics (4), and no subject area defined (19).
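The counts above can be cross-checked with a little arithmetic: each single-label breakdown should sum to the 87 included guides, the exclusions should account for the gap between 155 screened and 87 included, and each percentage is a share of 87. The short Python sketch below restates only counts reported in this section; the dictionary labels and the `shares` helper are illustrative names.

```python
from typing import Dict

TOTAL = 87  # guides included in the review


def shares(counts: Dict[str, int], total: int = TOTAL) -> Dict[str, float]:
    """Each count as a percentage of `total`, rounded to one decimal place."""
    return {label: round(100 * n / total, 1) for label, n in counts.items()}


regions = {
    "Central Plains": 5, "Mid Atlantic": 12, "Midwest": 17, "North Atlantic": 14,
    "Pacific Northwest": 1, "Rocky Mountains": 2, "South Central": 8,
    "Southeast": 17, "West Coast": 11,
}
carnegie = {"R1": 64, "R2": 11, "Special focus": 5, "No designation": 7}
control = {"Public": 61, "Private nonprofit": 26}
last_updated = {"2023": 55, "2022": 21, "2021": 2, "No date": 9}
exclusions = {"Not a university": 1, "No systematic review coverage": 16, "Outside the US": 51}

# Every single-label breakdown should account for all included guides.
for name, counts in {"region": regions, "Carnegie": carnegie,
                     "control": control, "last updated": last_updated}.items():
    print(name, sum(counts.values()))

# Screened guides minus exclusions should equal the included guides.
print(155 - sum(exclusions.values()))  # prints: 87
print(shares(last_updated)["2023"])    # prints: 63.2
```

The subject-area counts are deliberately left out of the sum check: they total 107 rather than 87 because a guide could cover more than one subject area.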

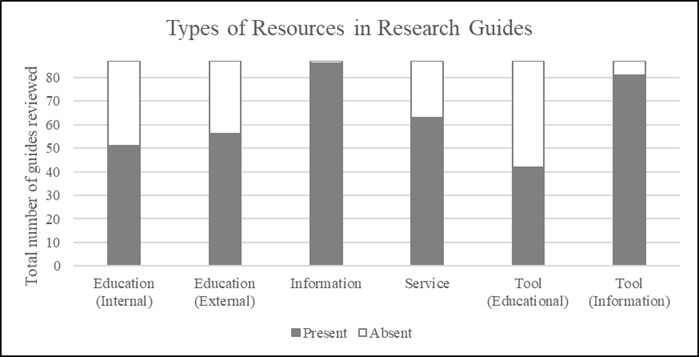

Each guide was reviewed for the absence or presence of the following types of content: education (internal), education (external), information, service, tool (educational), and tool (informational) (Figure 2). In-depth information was collected for each stage. All but one research guide (98.9%) provided information, and an informational listing of tools was more common than an educational guide to the tools. Research guides were coded for providing information about a tool (45; 51.7%), providing educational information for a tool (1; 1.1%), or both (41; 47.1%). Additionally, external education was slightly more common than internal education. Research guides provided either internal education only (18; 20.7%), external education only (36; 41.4%), or both internal and external education (33; 37.9%).

Figure 2

Types of content resources present in reviewed research guides.
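Because each content type was coded as present or absent, pairs of flags collapse into mutually exclusive groups ("A only", "B only", "both", "neither"), which is how the internal/external education split above is reported. The Python below is a hypothetical sketch of that tally; `classify` and `tally` are illustrative names, and the input flags are rebuilt from the reported education counts rather than taken from the study's spreadsheet.

```python
from collections import Counter
from typing import Iterable, Tuple


def classify(has_a: bool, has_b: bool, a: str, b: str) -> str:
    """Collapse two presence/absence flags into one mutually exclusive label."""
    if has_a and has_b:
        return "both"
    if has_a:
        return f"{a} only"
    if has_b:
        return f"{b} only"
    return "neither"


def tally(flags: Iterable[Tuple[bool, bool]], a: str, b: str) -> Counter:
    return Counter(classify(x, y, a, b) for x, y in flags)


# Flags rebuilt from the reported education counts: (internal, external).
education = [(True, False)] * 18 + [(False, True)] * 36 + [(True, True)] * 33
counts = tally(education, "internal", "external")
print(counts["internal only"], counts["external only"], counts["both"])  # prints: 18 36 33
# Totals per type include the guides coded "both".
print(counts["internal only"] + counts["both"], counts["external only"] + counts["both"])  # prints: 51 69
```

The second line shows why the groups and the per-type totals differ: 51 guides offered internal education in some form and 69 offered external education.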

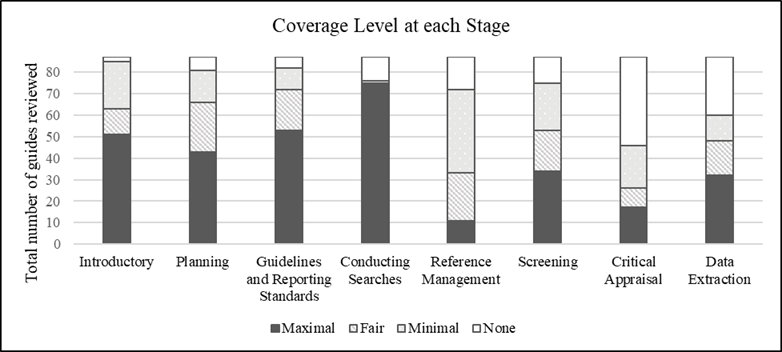

In-depth data were collected for each stage (Introductory, Planning, Guidelines and Reporting Standards, Conducting Searches, Reference Management, Screening, Critical Appraisal, and Data Extraction) and for support services offered. After this in-depth data collection, each guide was evaluated for its comparative coverage level at each stage, noted as maximal, fair, minimal, or none in comparison to the other guides reviewed (Figure 3). Minimal coverage was defined as one to two items, components, educational or informational tools, or resources provided. Fair coverage was defined as three to four items. Maximal coverage was defined as five or more items. None was defined as no coverage at all. Unsurprisingly, the best-covered stages were those that required library resources or could benefit from librarian assistance, e.g., conducting searches, and the least acknowledged stages included critical appraisal and data extraction, two stages of evidence synthesis that typically require clinical or topical expertise.

Figure 3

Coverage level at each stage.
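The coverage levels behind Figure 3 are threshold bands on the number of items a guide offers at a stage. The sketch below is a hypothetical Python rendering; it assumes the "fair" band covers three to four items so that it does not overlap the one-to-two-item "minimal" band, and `coverage_level` and `stage_profile` are invented names.

```python
from collections import Counter
from typing import List


def coverage_level(n_items: int) -> str:
    """Map the number of items a guide offers at one stage to a coverage level.

    Assumption: "fair" is read as 3-4 items so it does not overlap the
    1-2 item "minimal" band.
    """
    if n_items < 0:
        raise ValueError("item count cannot be negative")
    if n_items == 0:
        return "none"
    if n_items <= 2:
        return "minimal"
    if n_items <= 4:
        return "fair"
    return "maximal"


def stage_profile(item_counts: List[int]) -> Counter:
    """Tally coverage levels for one stage across many guides."""
    return Counter(coverage_level(n) for n in item_counts)


print([coverage_level(n) for n in (0, 1, 2, 3, 4, 5, 9)])
# prints: ['none', 'minimal', 'minimal', 'fair', 'fair', 'maximal', 'maximal']
```

Running `stage_profile` once per stage would yield the per-stage distribution of levels that a chart like Figure 3 summarizes.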

Of the 87 reviewed research guides, all 87 (100%) included “introductory” information. The most frequently referenced types of reviews included systematic reviews, meta-analyses, scoping reviews, rapid reviews, and narrative reviews. Research guides that covered the topic in greater detail included the following: critical reviews, mapping reviews/systematic maps, mixed studies reviews/mixed methods reviews, overviews, qualitative systematic reviews/qualitative evidence synthesis, state-of-the-art reviews, systematic search and reviews, systematized reviews, and umbrella reviews. The most referenced sources of information were Grant and Booth (2009) and the "Review Methodologies Decision Tree" by Cornell University Library (2023).

During the planning phase, 27 research guides (31.0%) mentioned using a question framework and refining a research question. Of the 87 research guides reviewed, 57 (65.5%) referred to timelines and team building. The timelines outlined lasted, on average, between 12 and 18 months. The most commonly suggested teams typically included three or more members, such as the principal investigator, a content expert, two reviewers or screeners, and an operations/project manager. Protocol registration was included in 41 research guides (47.1%). The most referenced sources during the planning phase included Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA), International Prospective Register of Systematic Reviews (PROSPERO), and Open Science Framework (OSF). Although PRISMA is not used to register a protocol, it was highlighted as a source to support protocol development and reporting standards. During data collection, references to PRISMA were coded, but its specific extensions were not coded separately.

Of the 87 research guides surveyed, 83 research guides (95.4%) included information on available standards and guidelines. The most referenced guidelines and standards included the following: PRISMA, Cochrane, PROSPERO, OSF, JBI (formerly the Joanna Briggs Institute), Campbell Collaboration, Institute of Medicine (IOM), Agency for Healthcare Research and Quality Systematic Review Data Repository (AHRQ SRDR), and the Enhancing the QUAlity and Transparency Of health Research (EQUATOR) Network. PROSPERO and OSF are not official standards or guidelines, but they are commonly used tools for protocol registration, which is a common step in official standards and guidelines. When included, PROSPERO and OSF were acknowledged in the context of best practices for protocol development and registration. More detailed research guides included potential sources for locating published systematic reviews, e.g., Epistemonikos, a database of health evidence.

Only 9 research guides (10.3%) did not provide any information related to conducting searches. Guides typically included suggested databases (e.g., Embase, PubMed/MEDLINE (Ovid), CINAHL Complete, Cochrane Library, Scopus, and Web of Science), sources of grey literature (e.g., OpenGrey.eu, medRxiv, NIH RePORTER, and Global Index Medicus), and suggested search tools (e.g., MeSH on Demand, Systematic Review Accelerator: Polyglot Search, and Yale MeSH Analyzer). More detailed guides listed additional databases that might be helpful for searching (e.g., ERIC, PsycINFO, OTseeker, and speechBITE).

Reference management was highlighted in 74 research guides (85.1%). The most referenced citation management tools were Zotero, EndNote, RefWorks, and Excel. Systematic review software can also be used for this stage; the most referenced systematic review software mentioned included Covidence and DistillerSR.

In terms of screening, 74 research guides (85.1%) mentioned the use of systematic review software. Commonly highlighted software included tools with institutional subscriptions or fees (Covidence, DistillerSR, Cochrane RevMan, and EPPI-Reviewer IV) as well as open access software (Rayyan, abstrackr, and colandr). The SR Toolbox (Marshall et al., 2022) was also highlighted.

Critical appraisal was covered in 60 research guides (69.0%). The most referenced sources included the following: CASP checklists, Centre for Evidence-Based Management (CEBM) Critical Appraisal Tools, Cochrane Risk of Bias (ROB) 2.0 Tool, Jadad Scale, LEGEND (Let Evidence Guide Every New Decision) Evidence Evaluation Tools, AMSTAR (A MeaSurement Tool to Assess systematic Reviews) checklists, AHRQ SRDR+, Grading of Recommendations Assessment, Development and Evaluation (GRADE), JBI Critical Appraisal Tools, Newcastle-Ottawa Scale (NOS), Scottish Intercollegiate Guidelines Network (SIGN), and PEDro Scale.

Fewer research guides provided information or education on data extraction. Of the 46 guides (52.9%) that did, the most frequently referenced resources included example forms in Excel or Word and software (Covidence, DistillerSR, JBI SUMARI).

Of the 87 research guides, 63 (72.4%) mentioned services offered in support of evidence synthesis projects, and 24 (27.6%) did not. Those offering support services fell into three categories: consultation and training only (13; 14.9%); a two-tier option of consultation and training plus collaboration and co-authorship (32; 36.8%); and a three-tier option with consultation and training, collaboration with acknowledgment, and collaboration with co-authorship (18; 20.7%).
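The service models can be read off the set of tiers each guide advertises, and the reported counts can be turned back into shares of the 87 guides as a consistency check (13 + 32 + 18 = 63 guides offering services; 13 of 87 is 14.9%). The Python below is a hypothetical sketch: the tier strings and `service_model` are illustrative names, and only the counts come from this section.

```python
from typing import Dict, FrozenSet

CONSULT = "consultation and training"
ACK = "collaboration with acknowledgment"
COAUTH = "collaboration with co-authorship"


def service_model(tiers: FrozenSet[str]) -> str:
    """Name the service model implied by the set of tiers a guide advertises."""
    if not tiers:
        return "no services mentioned"
    if tiers == {CONSULT}:
        return "consultation and training only"
    if tiers == {CONSULT, COAUTH}:
        return "two-tier"
    if tiers == {CONSULT, ACK, COAUTH}:
        return "three-tier"
    return "other combination"


# Counts as reported in this study (87 guides in total).
reported: Dict[str, int] = {
    "consultation and training only": 13,
    "two-tier": 32,
    "three-tier": 18,
    "no services mentioned": 24,
}
total = sum(reported.values())
offering = total - reported["no services mentioned"]
pct = {model: round(100 * n / total, 1) for model, n in reported.items()}
print(total, offering)  # prints: 87 63
print(pct)
```

Using sets makes the classification independent of the order in which a guide lists its tiers; any combination not described in the study falls through to "other combination".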

Discussion

Tools and Resources Shared

With this research, I aimed to identify tools and resources shared by librarians on systematic review research guides. The most referenced systematic review software tools were Covidence, DistillerSR, Cochrane RevMan, EPPI-Reviewer IV, Rayyan, abstrackr, and colandr. Several of these tools are either specific to certain publications (e.g., Cochrane) or require a subscription (e.g., Covidence and DistillerSR). For institutions with subscriptions, it was more common to find a link to a single source, e.g., DistillerSR. For institutions without paid subscriptions, librarians creating these research guides either provided links only to free open access software or included the subscription software with a note that it charged a fee. This directly tied into the citation management software suggested in the research guides. Subscription software can often handle citation management as well as data extraction, but the open access software cannot. Therefore, when free screening software was suggested, the guide was also likely to link to Zotero, EndNote, and RefWorks, including notices about potential fees with these tools.

Depending on the availability of resources (e.g., personnel, time), librarians may choose to refer to other research guides instead of creating their own informational or educational resources. For example, a librarian may prefer to link directly to the commonly referenced YouTube video prepared by the Cochrane Consumers and Communication Group, La Trobe University, and supported by Cochrane Australia (Cochrane, 2016). Each librarian must decide whether to refer to other institutions or to create institution-specific information. The latter requires additional support in terms of personnel; an understaffed librarian may not have the time.

From the data collected, I identified the most frequently referenced systematic review research guides. It is interesting to note that Cornell University, whose research guide was the most referenced, was not in the top ten results of the incognito Google search. These results raise an unanswered question: how do librarians determine which research guides to reference? It is unknown whether they rely on personal connections or a simple Google search to find potential source research guides. Future investigations might determine how librarians select or evaluate the research guides to which they refer.
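Identifying the most referenced guide is a counting problem over the referral records kept during sampling. The Python below is a minimal, hypothetical sketch using `collections.Counter`; the institution names are placeholders, not the study's data, and the choices to count repeated links from one guide once and to ignore self-links are assumptions rather than documented coding rules.

```python
from collections import Counter
from typing import Dict, List, Tuple


def most_referenced(referrals: Dict[str, List[str]], k: int = 3) -> List[Tuple[str, int]]:
    """Rank referred-to guides by how many distinct guides link to them.

    `referrals` maps each guide's institution to the institutions whose guides
    it links to. Repeated links from one guide count once; self-links are ignored.
    """
    counts: Counter = Counter()
    for source, targets in referrals.items():
        counts.update({t for t in targets if t != source})
    return counts.most_common(k)


# Hypothetical referral data, not the study's.
referrals = {
    "Delta": ["Alpha", "Beta", "Alpha"],
    "Epsilon": ["Alpha", "Gamma"],
    "Zeta": ["Beta", "Alpha"],
    "Alpha": ["Alpha"],
}
print(most_referenced(referrals, k=2))  # prints: [('Alpha', 3), ('Beta', 2)]
```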

Coverage of Stages of Systematic Reviews

Creating a research guide also requires that the authors determine how many informational or educational resources to include. Although Stone et al. (2018) demonstrated enhanced student learning when resources were organized around the how and why of the research process, Lee et al. (2021) have shown that systematic review research guides currently act as information repositories sharing informational tools rather than educational resources. Knowing their audience leads guide creators to decisions about whether the systematic review research guide shares a few links with limited information or serves as an in-depth resource geared toward faculty. Regarding protocol registration, the most minimal research guides provided links to the OSF (https://osf.io/) and PRISMA (http://www.prisma-statement.org/) websites. The most in-depth research guides provided information related to planning for publication. If resources related to publishing were shared, they appeared either near the beginning or at the end of the research guide. The benefit of selecting a journal for publication early in the process is to ensure that its standards are adhered to. For example, protocol registration or adherence to a specific standard such as Cochrane might be a requirement for publication.

Librarians included many links to recommended databases in the health sciences. Presumably, librarians feel most confident discussing library resources and how to use them. The question of depth of coverage relates to whether educational resources regarding these databases were shared, or whether strategies for creating a sensitive, unbiased, and reproducible search strategy were shared. Even in the conducting searches stage, which had the highest maximal coverage in the research guides studied, librarians omitted some topics. For example, only a few research guides included commonly used search hedges or tools such as MeSH on Demand and the Yale MeSH Analyzer. Only two research guides addressed antiquated and potentially offensive language; the University of Michigan developed and included a note for authors on this topic (Townsend et al., 2022). It is impossible to know why librarians omitted this information. Full-text article retrieval was barely acknowledged, possibly because librarians consider the information needs of their users when developing a guide: a researcher working on a systematic review may already be aware of the steps required to complete an interlibrary loan request.

It is unsurprising that the stages of a systematic review least covered in the studied research guides included critical appraisal and data extraction. These two phases typically require clinical or topical expertise and may be the least representative of librarians’ skills. It is equally unsurprising that the stages with the greatest coverage across all surveyed research guides were the introductory, planning, guidelines and reporting standards, conducting searches, and reference management stages.

Support Services Offered

Services offered generally followed a three-tier model. The first tier was consultation and training, in which the librarian offered consultation and training but did not take an active role in the review. When other tiers of service were also offered, this first tier was a limited tier generally meant for graduate students. The mid-level tier involved a more active role that required acknowledgement in a published paper. The highest tier was the partnership role, which required coauthorship in a published paper. Overall, 16% of surveyed institutions offered only the consultation and training tier, 36.8% offered a two-tier system comprising the consultation and training tier and the coauthorship tier, and 20.7% offered all three tiers.

Rethlefsen et al. (2015) demonstrated the value of a librarian as coauthor in strengthening search strategies and their documentation. As formalized systematic review services are a documented role of librarians (Spencer & Eldredge, 2018), it is unsurprising that 72.4% of the research guides reviewed offered some level of support service. Many institutions offering the coauthorship tier also described their qualifications for offering these services. Without such training, however, the first question is whether a librarian is qualified to offer this service at all. Several institutions stated that services had been temporarily or permanently suspended because of bottlenecks caused by an overwhelming backlog of projects, staff cuts, and the impacts of the pandemic. In such cases, these institutions most commonly offered only the consultation and training tier. Finally, three institutions (3.5%) offered fee-based services for the coauthorship tier.

Potential Decisions Requiring Consideration

When creating or updating a systematic review research guide, librarians must decide how much information and how many tools to include. That decision must be driven by the needs of the patrons. An academic institution with a special focus designation may have different research needs than an institution designated as having very high research activity (R1). Librarians must ask themselves what the aim of the research guide is. Several research guides provided no descriptive text, only many links without context. Other research guides displayed a learning-centered pathfinder design that told a “story,” explaining in detail what a systematic review is and which guidelines are necessary to complete one successfully. Charrois (2015) identified organizing potential studies during the search stage as a difficulty. An informational link to citation management software can point a researcher toward a solution, whereas an educational resource on the same tool can itself be the solution. The organization of information is as important as the information provided. Librarians must also determine how they will maintain the research guide: whether they create educational tools or provide referral links to external resources, they must keep the shared content accurate and current.

Once librarians have identified their audience, they face further decisions before creating the research guide. Of the research guides surveyed, only 66% mentioned the timeline and team required to complete a systematic review. This finding is significant for recognizing the role of the academic librarian in the systematic review process. Additional research is needed to better understand the role librarians play in supporting systematic reviews (e.g., informing researchers that their projects are unlikely to be successful in the time allotted).

Limitations

This project was limited in scope to English-language research guides from U.S. institutions. The author acknowledges that excellent work is coming out of other regions of the world, but reviewing systematic review library research guides from elsewhere was beyond the scope of this paper. The search was also limited to an incognito Google search for research guides covering systematic reviews. Research guides were included regardless of platform or provider. While these results did capture LibGuides on the Springshare platform, Springshare’s community site was not searched for existing LibGuides.

While this is a survey of systematic review guides, it is not a systematic review of research guides; at best, it is a systematized review. The research team did not use two independent screeners and data extractors for the research guides screened and the data collected, which could introduce bias. The accessibility, usability, and quality of the included educational and informational resources were not appraised. A future step would be to establish a gold standard for systematic review library research guides.

Conclusion

In this content analysis review, the author surveyed systematic review library research guides to determine the considerations and decisions that academic librarians face when creating and updating these research guides and systematic review support services. The tools, software, guidelines, and standards most frequently referenced in the research guides were highlighted. Although this study was limited to systematic review research guides in the United States, the author attempted to minimize bias within that scope by using an incognito Google search and hand searching to identify as many systematic review research guides meeting the inclusion criteria as possible.

In conclusion, a systematic review library research guide is not a research guide that can be created and then forgotten. Tools, resources, services, and even the theory may develop over time. For example, the tools and resources commonly used to screen results or extract data are likely to change as artificial intelligence and machine learning continue to develop. Recommendations for librarians developing or updating systematic review research guides or support services include continual training and practical work in the field, as well as maintaining familiarity with all resources and tools linked in the research guide.

References

American Council on Education. (2024). Carnegie classifications of institutions of higher education. https://carnegieclassifications.acenet.edu/index.php

Bergstrom-Lynch, Y. (2019). LibGuides by design: Using instructional design principles and user-centered studies to develop best practices. Public Services Quarterly, 15(3), 205–223. https://doi.org/10.1080/15228959.2019.1632245

Beverley, C. A., Booth, A., & Bath, P. A. (2003). The role of the information specialist in the systematic review process: A health information case study. Health Information and Libraries Journal, 20(2), 65–74. https://doi.org/10.1046/j.1471-1842.2003.00411.x

Bullers, K., Howard, A. M., Hanson, A., Kearns, W. D., Orriola, J. J., Polo, R. L., & Sakmar, K. A. (2018). It takes longer than you think: Librarian time spent on systematic review tasks. Journal of the Medical Library Association, 106(2), 198–207. https://doi.org/10.5195/jmla.2018.323

Chalmers, I., & Fox, D. M. (2016). Increasing the incidence and influence of systematic reviews on health policy and practice. American Journal of Public Health, 106(1), 11–13. https://doi.org/10.2105/AJPH.2015.302915

Charrois, T. L. (2015). Systematic reviews: What do you need to know to get started? The Canadian Journal of Hospital Pharmacy, 68(2), 144–148. https://doi.org/10.4212/cjhp.v68i2.1440

Cochrane. (2016, January 27). What are systematic reviews? [Video]. YouTube. https://www.youtube.com/watch?v=egJlW4vkb1Y

Cochrane. (2020, January 3). Evidence synthesis - What is it and why do we need it? https://www.cochrane.org/news/evidence-synthesis-what-it-and-why-do-we-need-it

Cornell University Library. (2023, May 19). A guide to evidence synthesis: Types of evidence synthesis. https://guides.library.cornell.edu/evidence-synthesis/types

Grant, M. J., & Booth, A. (2009). A typology of reviews: An analysis of 14 review types and associated methodologies. Health Information & Libraries Journal, 26(2), 91–108. https://doi.org/10.1111/j.1471-1842.2009.00848.x

Harwood, T. G., & Garry, T. (2003). An overview of content analysis. Marketing Review, 3(4), 479–498. https://doi.org/10.1362/146934703771910080

Hoffmann, F., Allers, K., Rombey, T., Helbach, J., Hoffmann, A., Mathes, T., & Pieper, D. (2021). Nearly 80 systematic reviews were published each day: Observational study on trends in epidemiology and reporting over the years 2000-2019. Journal of Clinical Epidemiology, 138, 1–11. https://doi.org/10.1016/j.jclinepi.2021.05.022

Kim, I., & Kuljis, J. (2010). Applying content analysis to web-based content. Journal of Computing and Information Technology, 18(4), 369–375. https://doi.org/10.2498/cit.1001924

Koffel, J. B. (2015). Use of recommended search strategies in systematic reviews and the impact of librarian involvement: A cross-sectional survey of recent authors. PLoS ONE, 10(5), e0125931. https://doi.org/10.1371/journal.pone.0125931

Lackey, M. J., Greenberg, H., & Rethlefsen, M. L. (2019). Building the systematic review core in an academic health sciences library. Journal of the Medical Library Association, 107(4), 588–594. https://doi.org/10.5195/jmla.2019.711

Laynor, G. (2022). Can systematic reviews be automated? Journal of Electronic Resources in Medical Libraries, 19(3), 101–106. https://doi.org/10.1080/15424065.2022.2113350

Lee, J., Hayden, K. A., Ganshorn, H., & Pethrick, H. (2021). A content analysis of systematic review online library guides. Evidence Based Library and Information Practice, 16(1), 60–77. https://doi.org/10.18438/eblip29819

Li, L., Tian, J., Tian, H., Moher, D., Liang, F., Jiang, T., Yao, L., & Yang, K. (2014). Network meta-analyses could be improved by searching more sources and by involving a librarian. Journal of Clinical Epidemiology, 67(9), 1001–1007. https://doi.org/10.1016/j.jclinepi.2014.04.003

Marshall, C., Sutton, A., O'Keefe, H., & Johnson, E. (Eds.). (2022). The systematic review toolbox. http://www.systematicreviewtools.com/

Meert, D., Torabi, N., & Costella, J. (2016). Impact of librarians on reporting of the literature searching component of pediatric systematic reviews. Journal of the Medical Library Association, 104(4), 267–277. https://doi.org/10.3163/1536-5050.104.4.004

Murphy, S. A., & Boden, C. (2015). Benchmarking participation of Canadian university health sciences librarians in systematic reviews. Journal of the Medical Library Association, 103(2), 73–78. https://doi.org/10.3163/1536-5050.103.2.003

Rethlefsen, M. L., Farrell, A. M., Osterhaus Trzasko, L. C., & Brigham, T. J. (2015). Librarian co-authors correlated with higher quality reported search strategies in general internal medicine systematic reviews. Journal of Clinical Epidemiology, 68(6), 617–626. https://doi.org/10.1016/j.jclinepi.2014.11.025

Spencer, A. J., & Eldredge, J. D. (2018). Roles for librarians in systematic reviews: A scoping review. Journal of the Medical Library Association, 106(1), 46–56. https://doi.org/10.5195/jmla.2018.82

Stone, S. M., Lowe, M. S., & Maxson, B. K. (2018). Does course guide design impact student learning? College & Undergraduate Libraries, 25(3), 280–296. https://doi.org/10.1080/10691316.2018.1482808

Toews, L. (2019). Benchmarking veterinary librarians’ participation in systematic reviews and scoping reviews. Journal of the Medical Library Association, 107(4), 499–507. https://doi.org/10.5195/jmla.2019.710

Townsend, W., Anderson, P., Capellari, E., Haines, K., Hansen, S., James, L., MacEachern, M., Rana, G., & Saylor, K. (2022). Addressing antiquated, non-standard, exclusionary, and potentially offensive terms in evidence syntheses and systematic searches. https://doi.org/10.7302/6408

Tsafnat, G., Glasziou, P., Choong, M. K., Dunn, A., Galgani, F., & Coiera, E. (2014). Systematic review automation technologies. Systematic Reviews, 3(1), 74. https://doi.org/10.1186/2046-4053-3-74

Wang, S., Scells, H., Koopman, B., & Zuccon, G. (2023). Can ChatGPT write a good Boolean query for systematic review literature search? https://doi.org/10.48550/arxiv.2302.03495

White, M. D., & Marsh, E. E. (2006). Content analysis: A flexible methodology. Library Trends, 55(1), 22–45. https://doi.org/10.1353/lib.2006.0053

Yoon, A., & Schultz, T. (2017). Research data management services in academic libraries in the US: A content analysis of libraries’ websites. College & Research Libraries, 78(7), 920–933. https://doi.org/10.5860/crl.78.7.920