Who’s Publishing Systematic Reviews? An Examination Beyond the Health Sciences

Maribeth Slebodnik

Librarian & Liaison to the College of Nursing

Arizona Health Sciences Library

University of Arizona

Tucson, AZ

slebodnik@arizona.edu

Kevin Pardon

Associate Liaison Librarian

ASU Library

Arizona State University

Phoenix, AZ

kevin.pardon@asu.edu

Janice Hermer

Associate Liaison Librarian

ASU Library

Arizona State University

Phoenix, AZ

janice.hermer@asu.edu

Abstract

The number of systematic reviews (SRs) published continues to grow, and the methodology of evidence synthesis has been adopted in many fields outside of its traditional health sciences origins. SRs are now published in fields as wide ranging as business, environmental science, education, and engineering; however, little research has examined the nature and prevalence of non-health sciences systematic reviews (non-HSSRs). In this study, a large sample from the Scopus database was used as the basis for analyzing SRs published outside the health sciences. To map the current state of non-HSSRs, we investigated their characteristics and identified the subject areas publishing them. The results showed that a majority of the non-HSSRs examined lacked at least one characteristic commonly expected in health sciences systematic review (HSSR) methodology. The broad subject areas publishing non-HSSRs fall mostly within the social sciences and physical sciences.

Keywords: Systematic reviews, Evidence synthesis, Standards

Slebodnik, M., Pardon, K., & Hermer, J. (2022). Who’s publishing systematic reviews? An examination beyond the health sciences. Issues in Science and Technology Librarianship, 101. https://doi.org/10.29173/istl2671

Introduction

Although systematic reviews (SRs) originated in the health sciences and related fields, they are now commonly published in other disciplines. The number of SRs published annually has also increased dramatically in recent decades. As part of this rise in popularity, thousands of SRs have been added to the academic literature (Niforatos et al., 2019), and recent research demonstrates continuing growth in the number of SRs published (Hoffmann et al., 2021). In this article, we examine the current landscape of SRs published outside the health sciences and their characteristics.

Uman (2011) states that “systematic reviews, as the name implies, typically involve a detailed and comprehensive plan and search strategy derived a priori, with the goal of reducing bias by identifying, appraising, and synthesizing all relevant studies on a particular topic” (p. 57). SRs are generally conceived and written to provide a high level of evidence on a particular topic by gathering a comprehensive collection of relevant literature and synthesizing the assembled evidence. In the health sciences, this can mean finding all available reports investigating the same intervention and analyzing them to synthesize the available evidence related to a specific research question. Aggregating results in this way increases the level of evidence and confidence related to an intervention or conclusion. Following the stringent standards required for an SR reduces bias, allowing the best current evidence to be distilled into a clear answer that evaluates the harms and benefits of medical interventions (Clarke & Chalmers, 2018). In disciplines where assessing evidence for clinical use is not common, the meaning and determination of a “high level of evidence” may be quite different, and the process for gathering and assessing evidence may vary.

Foster and Jewell (2017) list a number of organizations performing SRs in disciplines such as business, education, international development, public policy, ecology, environmental sciences, and engineering. Standards and guiding documents developed for disciplines outside the health sciences diverge from health sciences SR standards such as those of Cochrane and the Joanna Briggs Institute (Aromataris & Munn, 2020; Higgins et al., 2019). For example, Tranfield et al. (2003) explicitly compare and contrast the standards required for Cochrane reviews with those appropriate for SRs in the management field, pointing out that the aims and methods of management research are not well matched to the aims and methods used in the medical field. Tranfield et al. (2003) note the need to produce rigorous research that relies more on qualitative methods and looks for practical solutions, recognizing that some principles of SR research can enhance the rigor of management research. One distinct difference is that research questions in management research are often developed or refined after the literature review has been completed, in contrast to the pre-determined research questions stressed strongly in health sciences SRs (Tranfield et al., 2003). Examples of fields for which specific standards and guiding documents have been developed include software engineering (de Almeida Biolchini et al., 2007; Kitchenham & Brereton, 2013), environmental science and conservation (Collaboration for Environmental Evidence [CEE], 2018), management (Tranfield et al., 2003), and social sciences (Petticrew & Roberts, 2006).

Objectives

While recognizing that the methods implemented may vary slightly, we assume that the intent to find and appraise relevant research remains universal to all scholars writing an SR, regardless of discipline. We aim to examine published non-health sciences systematic reviews (non-HSSRs) to investigate the ways in which the characteristics of non-HSSRs are similar to or different from the characteristics of health sciences systematic reviews (HSSRs). We sought to describe approaches and methods for undertaking an SR, not to critique or appraise the quality of the resulting non-HSSRs. Each non-HSSR author’s description of adherence to HSSR characteristics was taken at face value, and the authors of this study focused on tabulating the identifiable characteristics described in these non-HSSRs. The SR characteristics examined were predetermined criteria for inclusion and exclusion, adherence to prescribed standards and guiding documents, a reproducible search strategy, systematic searching of databases and resources, and the presence of librarian expertise (Aromataris & Munn, 2020; Higgins et al., 2019; Institute of Medicine, 2011). The objectives of this paper are to:

- Investigate the characteristics of non-health sciences systematic reviews.

- Determine the disciplines outside the health sciences that are publishing systematic reviews.

Methods

We searched the Scopus database for SRs published in disciplines outside of the health sciences. We chose Scopus because of its extensive content across disciplines and its inclusion of SRs (Vinyard & Whitt, 2016). Scopus provides broad subject areas and narrower subject area classifications for each journal, allowing SRs in this sample to be roughly grouped by discipline. According to a recent study (Baas et al., 2020), approximately 31% of the serial titles indexed in Scopus are from the health sciences subject area category, while journals in the other three main subject area categories, physical sciences (28%), social sciences (26%), and life sciences (15%), are somewhat less represented.

Search Strategy

A broad phrase search of the Scopus database using “systematic review” in either the article title or abstract with no date limit was performed in September 2017 and rerun in May 2019 to capture articles published during the interim period. This search phrase was selected because it is the most commonly used terminology to describe an SR and provided a large representative sample. In Scopus, a phrase enclosed by double quotation marks (“”) is a loose or approximate search, so spelling variants and plurals are returned in the results, while a phrase enclosed in curly brackets or braces ({}) is an exact search, and only the exact string is searched (Elsevier B.V., 2021b).

Health sciences articles were excluded using the Scopus subject area limit for the following subjects: biochemistry, dentistry, health sciences, immunology, medicine, neurosciences, nursing, pharmacology, psychology, and veterinary medicine. Several of these subject categories that were not clearly health sciences-focused, such as biochemistry, neurosciences, and psychology, were examined individually. In the sample we examined, a clear majority of the articles tagged with these categories addressed human or animal health topics, so these subject areas were excluded. Non-review articles were excluded by selecting the document type limit “review.” The source type limit “journal” was applied to eliminate non-journal articles. Citations were sorted into subject area categories and placed into labeled groups in EndNote. Each citation was then tagged with all associated subject area classifications per Scopus. All citations were downloaded into Excel for screening by title and abstract, and then by full text.

The complete Scopus search strategy is:

(TITLE("systematic review") OR ABS("systematic review")) AND ( LIMIT-TO ( DOCTYPE,"re" ) ) AND ( EXCLUDE ( SUBJAREA,"MEDI" ) OR EXCLUDE ( SUBJAREA,"BIOC" ) OR EXCLUDE ( SUBJAREA,"NURS" ) OR EXCLUDE ( SUBJAREA,"HEAL" ) OR EXCLUDE ( SUBJAREA,"PSYC" ) OR EXCLUDE ( SUBJAREA,"NEUR" ) OR EXCLUDE ( SUBJAREA,"PHAR" ) OR EXCLUDE ( SUBJAREA,"DENT" ) OR EXCLUDE ( SUBJAREA,"IMMU" ) OR EXCLUDE ( SUBJAREA,"VETE" ) ) AND ( LIMIT-TO ( SRCTYPE,"j" ) )

This resulted in 3,363 citations that were subjected to title and abstract screening, followed by full-text screening using the following inclusion and exclusion criteria:

- Inclusion
  - Includes “systematic review” in the title or abstract
  - Non-health sciences topic
- Exclusion
  - Articles addressing any aspect of human or animal health, illness, treatments, or interventions
  - Non-English language
  - Described as a study type other than an SR in the abstract or text of the article

Screening

Titles and abstracts were screened against our inclusion and exclusion criteria. Each of the three reviewers was assigned an equal portion of the 3,363 citations to screen by applying the inclusion and exclusion criteria. Each citation was examined by two reviewers in the title/abstract screening phase and by one reviewer in the full-text screening phase. An article that met one or more of the exclusion criteria was removed. Title/abstract screening eliminated 1,655 citations, leaving 1,708 SRs outside the health sciences disciplines. An additional 51 articles were eliminated during full-text screening, resulting in 1,657 full-text articles that were analyzed.
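The screening counts form a simple subtraction chain, of the kind usually drawn as a PRISMA-style flow diagram. As an illustration only, the following Python sketch checks that the reported numbers are internally consistent; the variable names are ours and are not part of the study’s workflow.

```python
# Counts reported in the Screening section (variable names are illustrative).
retrieved = 3363                 # citations returned by the Scopus search
excluded_title_abstract = 1655   # removed during title/abstract screening
excluded_full_text = 51          # removed during full-text screening

after_title_abstract = retrieved - excluded_title_abstract
final_dataset = after_title_abstract - excluded_full_text

print(f"After title/abstract screening: {after_title_abstract}")  # 1708
print(f"Analyzed full-text articles: {final_dataset}")            # 1657
```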

Data Collection

Characteristics of Non-Health Sciences Systematic Reviews

To address the first research objective, data from each article in the final dataset was collected using a customized Google Form. The data collected for each article included a unique label number, the evaluator’s name, answers to five questions about SR characteristics, and a notes field for each question. The five questions were:

- Are inclusion or exclusion criteria specified?

- Is a systematic review standard or other guiding document cited as the basis for the research methods?

- Is a search strategy included?

- Are databases or other search sources specified?

- Was a librarian involved in the SR process?

Each of the three team members independently collected answers to the five questions for one third of the full-text articles. More detailed data or observations were entered as text in the notes field. An additional notes field at the end of the Google Form provided a place to record notes that were not connected to a particular question. Prior to beginning data collection, the three team members examined the same set of 30 full-text articles and compared the resulting data as a norming exercise. Based on this norming exercise, the form was revised, and a detailed data collection guide was written to ensure shared understanding and consistency. Although an interrater reliability score was not calculated, reviewers discussed each discrepancy during norming to ensure consistent data collection. If any of the co-authors were uncertain about the answer to a question, it was flagged and resolved by discussion and consensus among all three members. For each of the five questions, we established conditions that determined whether to code the answer as a “Yes” or a “No.”

Inclusion and Exclusion Criteria

The first question asked whether each article identified inclusion and/or exclusion criteria. An article was coded as a “Yes” if a single inclusion or exclusion criterion was mentioned. Articles were not required to contain both inclusion and exclusion criteria. When no criteria were clearly labeled, the paper was reviewed for any use of the words “exclude,” “include,” “exclusion,” or “inclusion” to determine whether inclusion or exclusion criteria were specified in a less formal manner or described elsewhere as part of the methods.
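The fallback word search described above could, in principle, be partly automated. The following Python sketch is hypothetical and was not part of the study’s procedure; it assumes the article text is available as a plain string and, as a deliberate choice of ours, matches the stems “includ” and “exclud” so that forms such as “included” and “excluded” are also caught. It only flags sentences for a reviewer to read; whether a flagged sentence actually states a criterion remains a human judgment.

```python
import re

# Hypothetical helper: stems catch "include", "included", "excluding", etc.;
# "inclusion" and "exclusion" are listed separately because they do not
# start with "includ" / "exclud".
CRITERIA_PATTERN = re.compile(
    r"\b(?:includ|exclud|inclusion|exclusion)\w*", re.IGNORECASE
)


def flag_criteria_passages(full_text: str) -> list[str]:
    """Return the sentences that mention inclusion/exclusion terms."""
    # Naive sentence split: break after ., ?, or ! followed by whitespace.
    sentences = re.split(r"(?<=[.?!])\s+", full_text)
    return [s for s in sentences if CRITERIA_PATTERN.search(s)]


sample = ("We searched Scopus and Web of Science. Studies were included if they "
          "reported field data. Conference abstracts were excluded.")
for sentence in flag_criteria_passages(sample):
    print(sentence)
```

A word-boundary anchor (`\b`) keeps the pattern from matching inside unrelated words, but common uses such as “results include” will still be flagged, which is why the output is a reading list rather than a code.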

Systematic Review Standards or Other Guiding Documents

If an article cited an SR standard or guiding document, it was typically found in the introduction or the methods section. If not found there, the full text of the article was scanned to identify a standard or other guiding document. Guiding documents were broadly defined as any document, article, standard, protocol, etc. that was cited as the premise for the author’s methodology or used as the basis for the SR. The PRISMA statement illustrates why this broad definition was needed: it describes its purpose as a guide for reporting SRs and meta-analyses, not as a standard or guideline for conducting them (Moher et al., 2009). Nonetheless, it is frequently cited in both HSSRs and non-HSSRs in place of a standard such as those developed by the Joanna Briggs Institute or Cochrane (Aromataris & Munn, 2020; Higgins et al., 2019). If specific standards or guiding documents were cited, the article was coded as a “Yes.” If we were unable to verify the use of a specific standard or guiding document, the answer was coded as “No.” Articles that referred to another SR as their “standard” were also coded as a “Yes” because this met our definition of a guiding document. Articles that mentioned the development of their own standard but did not refer to any sort of external document as the basis for this self-developed standard were coded as a “No.”

In addition to tracking whether a standard was used, we also tracked which specific standards and guiding documents were referenced. The authors of named standards or guiding documents were recorded in the notes field. If an author cited their own previously published SR as an exemplar article or standard, we noted that fact in the notes field as “own article.” Lastly, articles that listed more than one standard or equivalent guiding document were coded as “multiple” in the notes field. The number of standards or guiding documents cited was noted, and the primary author of each was recorded.

Search Strategy

Regarding the presence or absence of a search strategy, a “Yes” was recorded if the article described at least one search strategy. In the PRESS 2015 Guideline Statement (McGowan et al., 2016), the six search strategy components are defined as:

- Translation of the research question into the search strategy

- Use of Boolean and proximity operators

- Use of subject headings

- Text word searching in addition to subject headings

- Correct spelling, syntax, and line numbers

- Limits and filters

While we did not strictly require those six search strategy components, we did expect to find a reasonable facsimile of a search strategy. Thus, if a search strategy was present, whether a single word or a full search string with Boolean operators, the answer was coded as a “Yes.” Articles in which a search process was clearly described in a text paragraph rather than by displaying the search strategy were also coded as a “Yes,” regardless of the perceived correctness of the search process. Articles that failed to define a search strategy or lacked any information about how the search was undertaken were coded as a “No.” Articles that listed the keywords used but made no attempt to describe how they were combined were also coded as “No” and were tracked by entering “keywords” in the notes field.
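The coding rules above reduce to a small decision procedure. The following Python sketch restates them for clarity; the three observation fields are hypothetical names of ours summarizing what a reviewer would have noted, and the study itself recorded these judgments manually in a Google Form rather than in code.

```python
from dataclasses import dataclass


@dataclass
class SearchReporting:
    """Hypothetical summary of what a reviewer observed in one article."""
    shows_search_string: bool       # any displayed strategy, from one word to a Boolean string
    describes_search_in_text: bool  # search process described in a prose paragraph
    lists_keywords_only: bool       # keywords listed without saying how they were combined


def code_search_strategy(obs: SearchReporting) -> tuple[str, str]:
    """Return (answer, note) under the coding rules described above."""
    if obs.shows_search_string or obs.describes_search_in_text:
        # Coded "Yes" regardless of the perceived correctness of the search.
        return "Yes", ""
    if obs.lists_keywords_only:
        # Bare keyword lists are coded "No" but tracked in the notes field.
        return "No", "keywords"
    return "No", ""


print(code_search_strategy(SearchReporting(False, False, True)))  # ('No', 'keywords')
```

Ordering the checks this way means an article that both lists keywords and describes how the search was run is coded “Yes,” which matches the rule that any described search process counts.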

Databases and Other Search Sources

To identify the databases or sources searched, we looked for any mention of specific (named) databases or other resources. This included databases, as well as search aggregators, journals, websites, or archives. If resources were described by name, the answer was coded as a “Yes.” Simply stating that unnamed resources had been searched was coded as a “No.” For articles in which named databases were described, the number of databases searched was recorded in the notes field. Google Scholar was accepted as a database for the purposes of this study, as were federated search tools. Some researchers described vendor platforms such as ProQuest or EBSCOhost as databases. These platforms usually include multiple databases, but without more information each named platform was counted as one resource. Articles describing a search of only journals, websites, or other resources were also coded as “Yes.”

Librarian Participation

If the contributions of a named or anonymous librarian/information professional were described in an article, the answer was coded as “Yes” for librarian participation. If no mention of a librarian, information professional, or any other equivalent role could be located then it was coded as “No.” We searched for evidence of librarian involvement by examining author credentials, as well as explicit description of a librarian’s work in the methods section and mention of contributions in the acknowledgements. If that was not successful, we searched the entire full text for the string “librar” to locate any other mentions of librarians or information professionals in the article.
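The final fallback, a full-text search for the string “librar,” works because that stem covers “library,” “librarian,” and “librarians.” The following Python sketch is a hypothetical illustration, assuming the article is available as plain text; the function name and the 40-character context window are our own choices, not details of the study.

```python
import re

# Stem search for "librar": matches "library", "librarian", "librarians", etc.
LIBRAR_PATTERN = re.compile(r"librar\w*", re.IGNORECASE)


def find_librarian_mentions(full_text: str, window: int = 40) -> list[str]:
    """Return a context snippet around each occurrence of the stem 'librar'."""
    snippets = []
    for match in LIBRAR_PATTERN.finditer(full_text):
        # Clamp the context window to the bounds of the text.
        start = max(0, match.start() - window)
        end = min(len(full_text), match.end() + window)
        snippets.append(full_text[start:end])
    return snippets


text = ("The authors thank the university library for access to databases. "
        "A research librarian helped design the search strings.")
for snippet in find_librarian_mentions(text):
    print(snippet)
```

In this made-up example, the first hit refers to a library providing access rather than to a librarian’s contribution, which is why each mention still has to be read in context instead of simply counted.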

Disciplines Outside Health Sciences Publishing Systematic Reviews

To address the second research objective, Scopus subject areas and subject area classifications were examined and tabulated. Each journal indexed in Scopus is assigned up to three of four potential subject areas (physical sciences, health sciences, life sciences, or social sciences). Journals are also assigned multiple subject area classifications, which are more granular subdivisions within the broader subject areas and are based on the aims and scope of the journal and the content it publishes. The subject areas and subject area classifications are not ranked when assigned by Scopus. Since the subject areas and classifications are assigned to the journal that an article is published in, not the article itself (Elsevier B.V., 2021c), the broad subject areas and subject area classifications do not necessarily describe the article level topics and are only a rough estimate of the disciplines to which the articles in the dataset belong.

Data Cleanup

The individual data from the final set of 1,657 articles were collected in an Excel spreadsheet and examined to ensure that they were consistent and complete. Data for each of the five questions about SR characteristics were tabulated for analysis. For example, if an article was recorded as having an SR standard or guideline, we verified that the guideline title or author was included in the corresponding notes field. Lastly, we categorized the final set of citations using the assigned subject areas and subject area classifications. For consistency, all percentages are rounded to the nearest whole number.

Results

Characteristics of Non-Health Sciences Systematic Reviews

The five questions about SR characteristics stated in the Methods section were used to examine the components of the first research objective: “Investigate the characteristics of non-health sciences systematic reviews.” The aggregated results for all five questions are displayed in Figure 1.

Inclusion and Exclusion Criteria

The presence of inclusion and exclusion criteria are an important component of SRs, because they allow the authors to clearly define and identify which articles will be included in the review. For this question, 1,075 articles (65%) were coded as a “Yes,” while 582 articles (35%) were coded as a “No” and did not explicitly mention any sort of inclusion or exclusion criteria.

Systematic Review Standards or Other Guiding Documents

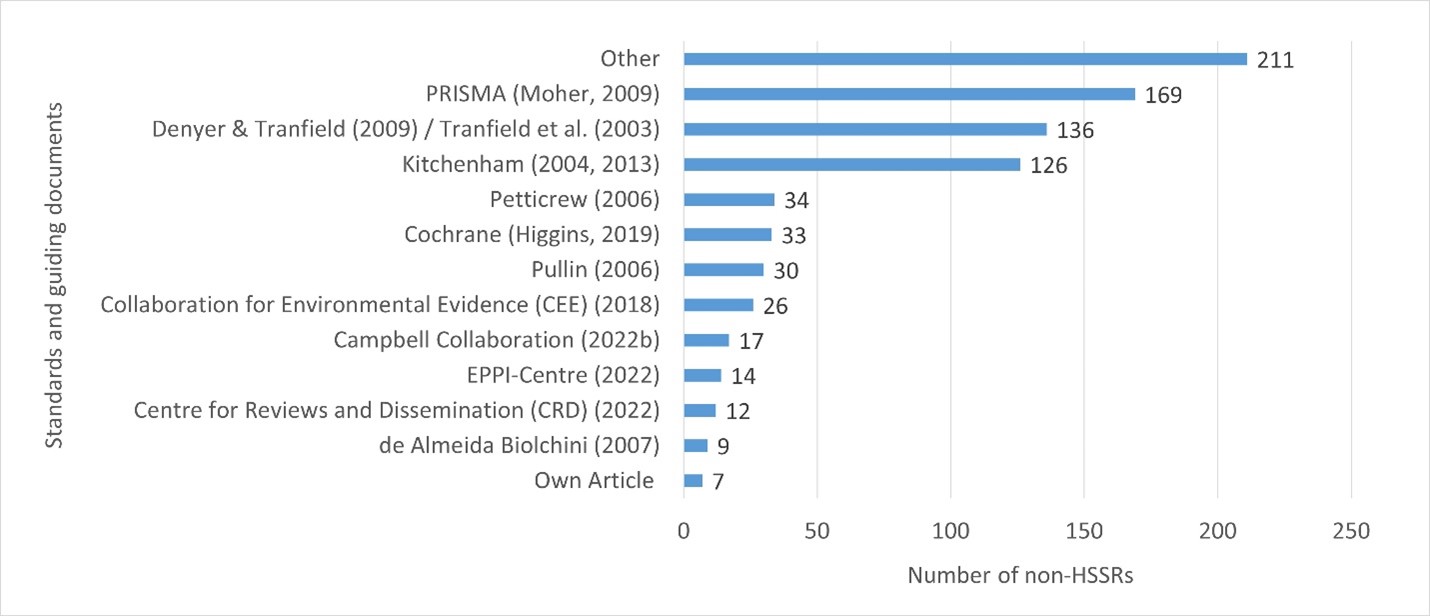

Citing an established, published SR standard or guiding document is an indication that an SR was designed to follow an accepted and transparent process. There were 764 articles (46%) coded as a “Yes” and 893 articles (54%) were coded as a “No.” Of the articles that cited a standard or guiding document, 520 articles cited one, 239 articles cited two to five, and 5 articles cited six or more standards or guiding documents.

As shown in Figure 2, the most cited standards or guiding documents were PRISMA (169 or 22%), Tranfield (136 or 18%), and Kitchenham (126 or 16%). The authors of the three most cited standards have published several versions, which were combined in the results. Standards not referenced elsewhere in this article include those of the EPPI-Centre, which aims at “informing policy and professional practice with sound evidence,” and the Centre for Reviews and Dissemination (CRD), which specializes in SRs and associated economic analyses for health sciences, as well as the development of evidence synthesis methodology (Centre for Reviews and Dissemination, 2022; Evidence for Policy and Practice Information and Co-ordinating Centre, 2022). Standards or guiding documents cited in fewer than seven articles are aggregated in the “Other” category (211 or 28%). The majority of items in the “Other” category were individual references to exemplar articles and are not displayed individually in Figure 2. Complete references to the named SR standards or guiding documents shown in Figure 2 are listed in the Appendix.

Search Strategy

The presence of a well-crafted SR search strategy allows other researchers to reproduce the dataset. After analysis, 655 articles (40%) received a “Yes” response and 1,002 articles (60%) in our dataset received a “No” response to this question. Keywords without context were coded as “No,” but a note was made in the notes field. Of the 1,002 articles coded as “No,” 487 (29% of the full dataset) listed keywords with no mention of how they were combined.

Databases and Other Resources

Specifying which databases or resources were searched for an SR indicates that the intention to be “systematic” is present in some form and enables reproducibility and transparency. There were 1,271 articles (77%) that specified the resources searched and were coded as a “Yes,” and 386 articles (23%) were coded as a “No.” Of the 1,271 articles that described the resources searched, 1,231 identified the databases used for their SR. The remaining forty articles (3% of the 1,271) did not search any databases, but instead searched specific journals or, in a few cases, conference proceedings, websites, or other miscellaneous sources of information. The number of databases used varied: around 20% of the articles that identified databases used only one database, and about 80% used two or more, as displayed in Figure 3.

Librarian Involvement

Our fifth question explored the topic of librarian involvement in SRs. Within health sciences librarianship, it is acknowledged that involvement of a librarian trained in evidence synthesis methods positively impacts the quality of an SR (Koffel, 2015; McGowan & Sampson, 2005; Rethlefsen et al., 2014; Rethlefsen et al., 2015). Sixty (4%) of the examined SRs acknowledged the work of a librarian in their review process. The remaining 1,597 articles (96%) made no mention of a librarian or information professional in the author credentials, main text, or acknowledgements.
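The percentages reported for the five characteristics all use the final dataset of 1,657 articles as the denominator and are rounded to the nearest whole number, as stated in the Data Cleanup section. The following Python sketch recomputes them from the reported counts as a consistency check; the dictionary and function names are ours. It also shows why the denominator matters for the keyword-only figure: 487 articles are 29% of the full dataset but about 49% of the 1,002 articles coded “No” for search strategy.

```python
TOTAL = 1657  # articles in the final dataset

# "Yes" counts for the five characteristics, as reported in the Results.
YES_COUNTS = {
    "inclusion/exclusion criteria": 1075,
    "standard or guiding document": 764,
    "search strategy": 655,
    "databases or other resources": 1271,
    "librarian involvement": 60,
}


def percent(count: int, total: int = TOTAL) -> int:
    """Percentage rounded to the nearest whole number.

    Python's round() sends exact .5 ties to the nearest even integer;
    none of the counts used here produce an exact tie.
    """
    return round(100 * count / total)


for characteristic, yes in YES_COUNTS.items():
    no = TOTAL - yes
    print(f"{characteristic}: Yes {yes} ({percent(yes)}%), No {no} ({percent(no)}%)")

# Keyword-only articles against two different denominators.
print(percent(487), percent(487, 1002))  # 29 49
```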

Disciplines Outside the Health Sciences Publishing Systematic Reviews

To investigate the second research objective, which concerns the disciplines outside the health sciences that publish SRs, we tabulated the subject areas and subject area classifications captured in the final dataset. Many journals are coded with multiple subject areas and subject area classifications, which greatly complicated this task. The three broad subject areas of social sciences, physical sciences, and life sciences were assigned 2,102 times to the journals that published the 1,657 articles in our final dataset: physical sciences (1,025), social sciences (871), and life sciences (206). Eighteen subject area classifications were assigned 2,803 times to the 1,657 articles (Figure 4), and 779 of these articles (47%) were assigned at least two subject area classifications. Scopus has both a broad subject area for social sciences and a subject area classification for social science; Figure 4 refers to the subject area classification, social science, rather than the broad subject area, social sciences.
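Because Scopus assigns subject areas at the journal level, and a journal may carry more than one, the number of assignments exceeds the number of articles (2,102 broad subject area assignments for 1,657 articles). The following Python sketch illustrates this kind of tallying on three made-up article records; the data and names are hypothetical and only demonstrate the counting logic, not the study’s actual spreadsheet.

```python
from collections import Counter

# Hypothetical records: each article inherits the broad subject areas
# assigned to its journal, and a journal can carry more than one.
articles = {
    "A1": ["Physical Sciences"],
    "A2": ["Physical Sciences", "Social Sciences"],
    "A3": ["Social Sciences", "Life Sciences"],
}

# One count per (article, subject area) assignment.
assignments = Counter(area for areas in articles.values() for area in areas)

# Articles whose journal carries at least two subject areas.
multi_assigned = sum(1 for areas in articles.values() if len(areas) >= 2)

print(sum(assignments.values()))  # 5 assignments for 3 articles
print(assignments.most_common())
print(multi_assigned)             # 2
```

Summing the counter gives total assignments, while counting articles separately gives the number of multiply assigned articles, mirroring the distinction between the 2,102 assignments and the 1,657 articles above.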

Discussion

The results of our analysis showed that non-HSSRs do not usually follow all of the guidelines recommended for HSSRs, and most of the non-HSSRs examined did not exhibit all five of the SR characteristics we investigated. While this is concerning, it is equally important to acknowledge that many HSSRs also do not follow all of the guidelines for conducting an SR (Jacobsen et al., 2021; Katsura et al., 2021). The characteristics most frequently found in non-HSSRs were inclusion/exclusion criteria and the databases searched. The findings also corroborate other recent studies (Hoffmann et al., 2021; Kocher & Riegelman, 2018) showing that SRs are being published in many subject areas outside the health sciences.

Characteristics of Non-Health Sciences Systematic Reviews

Inclusion and Exclusion Criteria

Authors appear to be aware of the importance of inclusion/exclusion criteria, as the majority of articles (65%) specified inclusion and/or exclusion criteria. In our dataset, only the characteristic of specifying databases (77%) was found at a greater rate. However, the absence of inclusion or exclusion criteria in over a third (35%) of the dataset is concerning. Without inclusion and exclusion criteria, it is unclear why the authors of an SR decided to include certain articles while excluding others. The risk of publication bias increases when inclusion and exclusion criteria are not predetermined (Page et al., 2014; Redulla, 2016).

Systematic Review Standards or Other Guiding Documents

The use of methods dictated by accepted SR standards or guiding documents informs the design of an SR protocol that will achieve the goal of transparency (Brackett & Batten, 2020). More than half (54%) of these SRs did not report the use of any guiding document or standard in the design of their review protocol. The lack of such guidance calls the rigor of the study into question, and the use of a guiding document (such as another SR) instead of an established standard (see “Other” results in Figure 2) also suggests a less rigorous approach to the SR process (Brackett & Batten, 2020). We did not examine the guiding documents themselves to see whether they were based on an established standard, so it is possible that some of the SRs based their research process on a guiding document that had no basis for being used as such. It is also interesting to note that many articles (244 or 32%) in this study reported the use of multiple standards and guiding documents, for reasons that are unclear.

Additionally, some SR standards, such as Kitchenham and colleagues’ standards for SRs in software engineering (2004; 2013), were developed for use in a specific field or subject area and are not necessarily suitable for SRs in other disciplines. This study did not evaluate whether the standard or guiding document used for an individual article was appropriate to the subject area, so it is possible that some articles followed a standard that was not designed for their discipline. The use of SR standards intended for specific fields in alternate areas of research may reflect the lack of guidance available, perhaps leading researchers to use what they could find.

Robust SR standards exist for many disciplines. Formed in 2000, the Campbell Collaboration “is an international social science research network that produces high quality, open and policy-relevant evidence syntheses, plain language summaries and policy briefs” (Campbell Collaboration, 2022a, para. 1). The Campbell Collaboration has published the Methodological Expectations of Campbell Collaboration Intervention Reviews (MECCIR) for social science fields, as well as a set of conduct and reporting standards based on the Cochrane standards (Campbell Collaboration, 2022b). MECCIR is used to guide the publication of Campbell SRs and gap maps. Environmental science has also developed a robust set of guidelines for conducting evidence synthesis. Version 5.0 of the Guidelines and Standards for Evidence Synthesis in Environmental Management is currently disseminated on the Collaboration for Environmental Evidence website (2018) and has been translated into Chinese, Japanese, and Spanish.

Several of the most frequently used non-HSSR standards such as Tranfield et al. (2003) and Denyer and Tranfield (2009), as well as Kitchenham and colleagues (2004; 2013) have published multiple versions, making it clear that the authors updated and expanded upon their original guidelines. Figure 3 tabulates the occurrences of multiple versions of standards in our dataset. These updates may reflect the rapid change occurring in SR methods, improved understanding of the SR process, or refinement of new processes to better reflect the needs of other areas of research.

Search Strategy

While 40% of the articles included some sort of search strategy, neither the quality nor the replicability of the search strategies was evaluated. Observed search strategies ranged in complexity from a single keyword to a more complex strategy with subject headings, keywords, and Boolean operators. Even though many articles included a “search strategy” using predetermined criteria, the percentage of articles that included a comprehensive and replicable search string is likely to be much lower.

In our sample, 29% of the SRs examined listed keywords but failed to describe how the keywords were combined. While including a list of keywords may represent progress towards a reproducible search strategy, in practice the difference between articles that include a list of keywords only and those that do not mention keywords at all is minimal. Additional education about how to describe one’s search strategy and recommending more widespread use of the PRISMA for Searching extension, a checklist specifically for SR literature searches, may be needed (Rethlefsen et al., 2021).

Databases and Other Resources

It is generally accepted that multiple databases should be selected and searched for SRs (Bramer et al., 2017) in order to comprehensively collect the evidence that answers the research question (Batten & Brackett, 2021). This standard was generally adhered to in this sample, as resources were specified at a higher rate than any other indicator we examined. Only 23% of the non-HSSRs did not specify the databases or resources searched. We did not evaluate the quality of the databases chosen because we are not subject experts in non-health sciences disciplines.

The non-HSSRs that used only journals and no databases were typically identified with social science, business, computer science, or environmental science subject area classifications. In most cases, it was unclear why databases were not used and why searching individual journals was considered the appropriate choice. A few authors stated that searching the top journals in their field was the best option to find targeted articles, but in most cases these authors also searched databases, adding specific journals to enhance the search strategy.

One in five of the articles that identified databases used only one database for their search. While there is no single correct number of databases to use, within HSSRs it is generally accepted that, to be comprehensive, multiple sources need to be carefully selected and searched (Batten & Brackett, 2021; Bramer et al., 2017; Lam & McDiarmid, 2016). Searching a single database or resource is generally not enough to ensure a comprehensive literature search; it is possible that the authors of the non-HSSRs who used only one database were not aware of the value of, or rationale for, searching multiple databases. Interpreting the number of SRs that searched only one database was further complicated by researchers who did not give specific details on the databases they used, instead listing platforms such as ProQuest, EBSCOhost, or Web of Science. Many of these platforms allow users to search multiple databases at once or, depending on one’s institution, to access different collections within a single platform. Without additional context, simply listing a platform like EBSCOhost or ProQuest is not useful for replicability purposes. Researchers may not be aware that their institution’s access to specific segments of a platform will differ from that of another institution, depending on the details of each library’s subscription.

Librarian Participation

When librarians are co-authors on SRs, they typically serve as project managers and are almost always the experts in database searching; the lack of librarian involvement in the examined SRs therefore does not bode well for the quality of the final products (Rethlefsen et al., 2015). In the health sciences, librarian participation is typically expected, though the expectation is often not fulfilled. It is a requirement of the Institute of Medicine standards (Institute of Medicine, 2011) and is strongly recommended by other standards such as those of Cochrane and the Joanna Briggs Institute (Aromataris & Munn, 2020; Higgins et al., 2019). Reported rates of librarian involvement in health sciences SRs range from 6% (Li et al., 2014) to 51% (Koffel, 2015). The results of this study (Figure 1) suggest that outside the health sciences, librarian involvement is even less common than it is within them. It is also possible that librarian participation often goes unacknowledged in both HSSRs and non-HSSRs. This raises questions about whether non-medical academic librarians, the population most likely to be called on to participate in non-HSSRs, are trained, prepared, and able to gain the experience needed to participate in these demanding projects.

Several academic libraries in the United States, notably those of Carnegie Mellon University, Cornell University, and the University of Minnesota, provide librarian support for non-HSSRs (Cornell University Library, 2022; Kocher & Riegelman, 2018). Many academic health centers have developed programmatic responses to the need for trained librarians on SR teams, but outside that environment the response appears to be still gathering steam. In some cases, health sciences libraries are reprioritizing their service portfolios, redirecting effort or adding personnel to support SR services as they recognize that their clientele increasingly demands them (Kallaher et al., 2020; Ludeman et al., 2015; Roth, 2018). Given the financial constraints that libraries perennially face, adding a labor-intensive service to their librarians’ responsibilities may not be realistic.

Of the 60 SRs that reported the involvement of a librarian, almost half were also coded “Yes” for the other four questions about SR characteristics. Only one article reported librarian involvement and answered “No” to all the other questions. This suggests that librarian involvement may be associated with greater conformance to the characteristics commonly found in SRs, or that teams with greater knowledge of SR standards may be more likely to enlist the assistance of a librarian. The lack of librarian involvement overall may also suggest that researchers are unaware of librarian expertise in SRs or do not have access to librarians with SR training and experience. Librarians can assist researchers with core requirements such as ensuring the reproducibility of the search, selecting databases, and using best practices recommended by SR guidelines. Training opportunities for librarians on how to conduct an SR, such as those jointly provided by Cornell University, Carnegie Mellon University, and the University of Minnesota, are vital for increasing the knowledge base in evidence synthesis among librarians in the life sciences, social sciences, and physical sciences (Cornell University Library, 2022).

Additional Observations

While we tried not to judge the quality of a single publication based on the answers to the five questions about SR characteristics, we struggled with the 321 articles (19% of the final dataset) for which every answer was “No.” Throughout the analysis, we agreed to accept the claims of authors who stated categorically that their publication was an SR, but absent adherence to any of the standard characteristics, are these SRs? From our perspective as health sciences librarians, the answer seems to be a resounding no. We acknowledge the possibility that some authors do not clearly understand what an SR is, or that there is a different definition of SRs in those authors’ fields. We nonetheless decided to keep these articles in our dataset, since each one illustrates a set of beliefs about what constitutes an SR, and together they contribute to the current and developing landscape of evidence synthesis as it is adapted to new disciplines. Because these articles may be SRs in name only, it is difficult to generalize the results to actual SRs outside the health sciences. However, a closer examination of the articles with five “No” answers and the fields they are published in may provide valuable information for institutions planning evidence synthesis efforts. One approach might be to sample this set of articles, analyze author demographics, and possibly interview authors about their preparation and knowledge of evidence synthesis.

Disciplines Outside the Health Sciences Publishing Systematic Reviews

The broad subject area that featured most prominently in our dataset is the physical sciences, closely followed by the social sciences and, less closely, the life sciences. The five most common subject area classifications in our dataset are social science, environmental science, business, computer science, and engineering (Figure 4). Social science was the most frequently assigned subject area classification, perhaps because it encompasses multiple fields such as education, sociology, and social work. Research examining specific subject areas in greater depth would allow for more nuanced conclusions about the growth of SRs in specific fields of research, as well as the development or adaptation of guidelines and standards to ensure high-quality publications. Ultimately, the numbers and trends demonstrate that SRs are being undertaken in numerous fields outside the health sciences. As the usefulness of SRs continues to be recognized, their expansion across fields is likely to continue.

Limitations and Future Research

This study was designed to examine the characteristics and publication of non-HSSRs. We did not examine whether individual SRs used a quality appraisal instrument or checklist, and we were lenient in determining whether an article should be coded as a “Yes” or a “No” when asking the five questions about SR characteristics. It is likely that in some cases, articles coded as including a certain characteristic still suffered from methodological flaws or other limitations. We did not contact authors to determine their reasoning for the choices they made when producing their SRs.

It was also suggested that the term “systematic literature review” be added to the search strategy and the search re-run to capture additional data. We investigated the occurrence of this phrase in Scopus and opted to work with the original dataset, believing that it is a representative sample of recently published non-HSSRs.

Regarding fields found publishing non-HSSRs, the assignment of broad subject area categories and subject area classifications is made by Scopus at the journal level rather than the article level. While we believe this data illustrates broad trends, the subject area categories and subject area classifications assigned by Scopus may only tangentially reflect the actual subject of the article (Mongeon & Paul-Hus, 2016; Wang & Waltman, 2016). It would be useful to examine SRs in greater detail and analyze the subjects more precisely.

The authors realized partway through the analysis that Scopus provides a keyword for SRs, which we did not use to filter our results. These keywords are assigned by the author or content provider and so are not uniform, but they may have yielded additional SRs and insights (Elsevier B.V., 2021a). Finally, the most recent data collected were from mid-2019, and more recent SRs have been published since.

The results of this research suggest additional questions to investigate. It would be helpful to track whether the percentage of non-HSSRs that follow accepted standards is increasing or decreasing as more non-HSSRs are published, and whether standards are becoming more uniform. This might be applied to a specific discipline, such as engineering or business, and tracked over time. Another question suggested by this study concerns the consequences of applying standards designed for one subject area or discipline in an unrelated field. Librarians working with particular subject areas may be able to shed light on disciplinary differences at play as evidence synthesis continues to evolve. Another topic to investigate is the use of quality appraisal in non-HSSRs and what instruments have been or should be developed to accomplish it.

Conclusion

By examining the characteristics of a set of published non-HSSRs, we report evidence that SR research methods are being adopted in fields outside the health sciences. We also report that while some of the accepted standards for health sciences SRs are being adopted for non-HSSRs, few of them are universally and consistently observed. These findings have implications for librarians both inside and outside the health sciences who currently participate in SR projects or who anticipate requests for this service. Going beyond our original research questions, are specific SR standards being developed that diverge from the HSSR model, yet are equally rigorous and relevant? In some disciplines, this is indeed the case. Librarians who are involved in SRs outside the health sciences should become familiar with the standards and guiding documents for relevant disciplines, and may also be called on to participate in the development of these standards. Academic librarians both inside and outside the health sciences may be tasked with supporting evidence synthesis for their faculty and students as the uptake of evidence synthesis research continues to spread to social sciences, physical sciences, and life sciences.

Acknowledgments

We would like to gratefully acknowledge our three initial readers: Michelle Fiander, Anna Liss Jacobsen and Evan Meszaros, as well as the ISTL reviewers and editors, for their thoughtful questions and suggestions. Your contributions made this article so much better!

References

Aromataris, E., & Munn, Z. (2020). JBI manual for evidence synthesis. The Joanna Briggs Institute. https://jbi-global-wiki.refined.site/space/MANUAL

Baas, J., Schotten, M., Plume, A., Côté, G., & Karimi, R. (2020). Scopus as a curated, high-quality bibliometric data source for academic research in quantitative science studies. Quantitative Science Studies, 1(1), 377–386. https://doi.org/10.1162/qss_a_00019

Batten, J., & Brackett, A. (2021). Ensuring the rigor in systematic reviews: Part 3, the value of the search. Heart and Lung, 50(2), 220–222. https://doi.org/10.1016/j.hrtlng.2020.08.005

Brackett, A., & Batten, J. (2020). Ensuring the rigor in systematic reviews: Part 1, the overview. Heart and Lung, 49(5), 660–661. https://doi.org/10.1016/j.hrtlng.2020.03.015

Bramer, W. M., Rethlefsen, M. L., Kleijnen, J., & Franco, O. H. (2017). Optimal database combinations for literature searches in systematic reviews: A prospective exploratory study. Systematic Reviews, 6, 245. https://doi.org/10.1186/s13643-017-0644-y

Campbell Collaboration. (2022a). Campbell collaboration. https://www.campbellcollaboration.org/

Campbell Collaboration. (2022b). Methodological expectations of Campbell collaboration intervention reviews (MECCIR). https://www.campbellcollaboration.org/about-meccir.html

Centre for Reviews and Dissemination. (2022). Centre for reviews and dissemination. https://www.york.ac.uk/crd/

Clarke, M., & Chalmers, I. (2018). Reflections on the history of systematic reviews. BMJ Evidence-Based Medicine, 23(4), 121–122. https://doi.org/10.1136/bmjebm-2018-110968

Collaboration for Environmental Evidence. (2018). Guidelines and standards for evidence synthesis in environmental management (Version 5.0 and previous versions). https://environmentalevidence.org/information-for-authors/

Cornell University Library. (2022). A guide to evidence synthesis: Evidence synthesis institute for librarians. https://guides.library.cornell.edu/evidence-synthesis/trainings

de Almeida Biolchini, J. C., Mian, P. G., Natali, A. C. C., Conte, T. U., & Travassos, G. H. (2007). Scientific research ontology to support systematic review in software engineering. Advanced Engineering Informatics, 21(2), 133–151. https://doi.org/10.1016/j.aei.2006.11.006

Denyer, D., & Tranfield, D. (2009). Producing a systematic review. In The Sage handbook of organizational research methods (pp. 671–689). Sage Publications Ltd.

Elsevier B.V. (2021a). How do author keywords and indexed keywords work? https://service.elsevier.com/app/answers/detail/a_id/21730/supporthub/scopus/

Elsevier B.V. (2021b). How do I search for a document? Find exact or approximate phrases or words. https://service.elsevier.com/app/answers/detail/a_id/34325/supporthub/scopus/

Elsevier B.V. (2021c). What are the most used subject area categories and classifications in Scopus? https://service.elsevier.com/app/answers/detail/a_id/14882/supporthub/scopus/related/1/

Evidence for Policy and Practice Information and Co-ordinating Centre. (2022). EPPI-Centre. https://www.ucl.ac.uk/ioe/departments-and-centres/centres/evidence-policy-and-practice-information-and-co-ordinating-centre-eppi-centre

Foster, M. J., & Jewell, S. T. (2017). Assembling the pieces of a systematic review: A guide for librarians. Rowman & Littlefield.

Higgins, J. P. T., Thomas, J., Chandler, J., Cumpston, M., Li, T., Page, M. J., & Welch, V. A. (2019). Cochrane handbook for systematic reviews of interventions. Cochrane Collaboration. https://training.cochrane.org/handbook/current

Hoffmann, F., Allers, K., Rombey, T., Helbach, J., Hoffmann, A., Mathes, T., & Pieper, D. (2021). Nearly 80 systematic reviews were published each day: Observational study on trends in epidemiology and reporting over the years 2000-2019. Journal of Clinical Epidemiology, 138, 1–11. https://doi.org/10.1016/j.jclinepi.2021.05.022

Institute of Medicine. (2011). Finding what works in health care: Standards for systematic reviews (J. Eden, L. Levit, A. Berg, & S. Morton, Eds.). National Academies Press. https://doi.org/10.17226/13059

Jacobsen, S. M., Douglas, A., Smith, C. A., Roberts, W., Ottwell, R., Oglesby, B., Yasler, C., Torgerson, T., Hartwell, M., & Vassar, M. (2021). Methodological quality of systematic reviews comprising clinical practice guidelines for cardiovascular risk assessment and management for noncardiac surgery. British Journal of Anaesthesia, 127(6), 905–916. https://doi.org/10.1016/j.bja.2021.08.016

Kallaher, A., Eldermire, E. R. B., Fournier, C. T., Ghezzi-Kopel, K., Johnson, K. A., Morris-Knower, J., Scinto-Madonich, S., & Young, S. (2020). Library systematic review service supports evidence-based practice outside of medicine. The Journal of Academic Librarianship, 46(6), 102222. https://doi.org/10.1016/j.acalib.2020.102222

Katsura, M., Kuriyama, A., Tada, M., Tsujimoto, Y., Luo, Y., Yamamoto, K., So, R., Aga, M., Matsushima, K., Fukuma, S., & Furukawa, T. A. (2021). High variability in results and methodological quality among overlapping systematic reviews on the same topics in surgery: A meta-epidemiological study. British Journal of Surgery, 108(12), 1521–1529. https://doi.org/10.1093/bjs/znab328

Kitchenham, B. A. (2004). Procedures for performing systematic reviews (Keele University Technical Report TR/SE-0401). https://www.inf.ufsc.br/~aldo.vw/kitchenham.pdf

Kitchenham, B., & Brereton, P. (2013). A systematic review of systematic review process research in software engineering. Information and Software Technology, 55(12), 2049–2075. https://doi.org/10.1016/j.infsof.2013.07.010

Kocher, M., & Riegelman, A. (2018). Systematic reviews and evidence synthesis: Resources beyond the health sciences. College & Research Libraries News, 79(5), 248–252.

Koffel, J. B. (2015). Use of recommended search strategies in systematic reviews and the impact of librarian involvement: A cross-sectional survey of recent authors. PloS One, 10(5), e0125931. https://doi.org/10.1371/journal.pone.0125931

Lam, M. T., & McDiarmid, M. (2016). Increasing number of databases searched in systematic reviews and meta-analyses between 1994 and 2014. Journal of the Medical Library Association, 104(4), 284–289. https://doi.org/10.3163/1536-5050.104.4.006

Li, L., Tian, J., Tian, H., Moher, D., Liang, F., Jiang, T., Yao, L., & Yang, K. (2014). Network meta-analyses could be improved by searching more sources and by involving a librarian. Journal of Clinical Epidemiology, 67(9), 1001–1007. https://doi.org/10.1016/j.jclinepi.2014.04.003

Ludeman, E., Downton, K., Shipper, A. G., & Fu, Y. (2015). Developing a library systematic review service: A case study. Medical Reference Services Quarterly, 34(2), 173–180. https://doi.org/10.1080/02763869.2015.1019323

McGowan, J., & Sampson, M. (2005). Systematic reviews need systematic searchers. Journal of the Medical Library Association, 93(1), 74–80. http://www.ncbi.nlm.nih.gov/pubmed/15685278

McGowan, J., Sampson, M., Salzwedel, D. M., Cogo, E., Foerster, V., & Lefebvre, C. (2016). PRESS peer review of electronic search strategies: 2015 guideline statement. Journal of Clinical Epidemiology, 75, 40–46. https://doi.org/10.1016/j.jclinepi.2016.01.021

Moher, D., Liberati, A., Tetzlaff, J., Altman, D. G., & The PRISMA Group. (2009). Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. Journal of Clinical Epidemiology, 62(10), 1006–1012. https://doi.org/10.1016/j.jclinepi.2009.06.005

Mongeon, P., & Paul-Hus, A. (2016). The journal coverage of Web of Science and Scopus: A comparative analysis. Scientometrics, 106(1), 213–228. https://doi.org/10.1007/s11192-015-1765-5

Niforatos, J. D., Weaver, M., & Johansen, M. E. (2019). Assessment of publication trends of systematic reviews and randomized clinical trials, 1995 to 2017. JAMA Internal Medicine, 179(11), 1593–1594. https://doi.org/10.1001/jamainternmed.2019.3013

Page, M. J., McKenzie, J. E., Kirkham, J., Dwan, K., Kramer, S., Green, S., & Forbes, A. (2014). Bias due to selective inclusion and reporting of outcomes and analyses in systematic reviews of randomised trials of healthcare interventions. Cochrane Database of Systematic Reviews, (10), MR000035. https://doi.org/10.1002/14651858.MR000035.pub2

Petticrew, M., & Roberts, H. (2006). Systematic reviews in the social sciences: A practical guide. Blackwell Publishing.

Redulla, R. (2016). Bias because of selective inclusion and reporting of outcomes and analyses in systematic reviews of randomized trials of healthcare interventions. International Journal of Evidence-Based Healthcare, 14(4), 183–185. https://doi.org/10.1097/xeb.0000000000000089

Rethlefsen, M. L., Farrell, A. M., Osterhaus Trzasko, L. C., & Brigham, T. J. (2015). Librarian co-authors correlated with higher quality reported search strategies in general internal medicine systematic reviews. Journal of Clinical Epidemiology, 68(6), 617–626. https://doi.org/10.1016/j.jclinepi.2014.11.025

Rethlefsen, M. L., Kirtley, S., Waffenschmidt, S., Ayala, A. P., Moher, D., Page, M. J., & Koffel, J. B. (2021). PRISMA-S: An extension to the PRISMA statement for reporting literature searches in systematic reviews. Systematic Reviews, 10, 39. https://doi.org/10.1186/s13643-020-01542-z

Rethlefsen, M. L., Murad, M. H., & Livingston, E. H. (2014). Engaging medical librarians to improve the quality of review articles. JAMA, 312(10), 999–1000. https://doi.org/10.1001/jama.2014.9263

Roth, S. C. (2018). Transforming the systematic review service: A team-based model to support the educational needs of researchers. Journal of the Medical Library Association, 106(4), 514–520. https://doi.org/10.5195/jmla.2018.430

Tranfield, D., Denyer, D., & Smart, P. (2003). Towards a methodology for developing evidence‐informed management knowledge by means of systematic review. British Journal of Management, 14(3), 207–222. https://doi.org/10.1111/1467-8551.00375

Uman, L. S. (2011). Systematic reviews and meta-analyses. Journal of the Canadian Academy of Child and Adolescent Psychiatry = Journal de l'Académie Canadienne de Psychiatrie de L'enfant et de l'Adolescent, 20(1), 57–59.

Vinyard, M., & Whitt, J. (2016). Scopus. The Charleston Advisor, 18(2), 52–57.

Wang, Q., & Waltman, L. (2016). Large-scale analysis of the accuracy of the journal classification systems of Web of Science and Scopus. Journal of Informetrics, 10(2), 347–364. https://doi.org/10.1016/j.joi.2016.02.003

Appendix: Cited Systematic Review Standards and Guiding Documents

Campbell Collaboration. (2022b). Methodological expectations of Campbell collaboration intervention reviews (MECCIR). https://www.campbellcollaboration.org/about-meccir.html

Centre for Reviews and Dissemination. (2022). Centre for reviews and dissemination. https://www.york.ac.uk/crd/

Collaboration for Environmental Evidence. (2018). Guidelines and standards for evidence synthesis in environmental management (Version 5.0 and previous versions). https://environmentalevidence.org/information-for-authors/

de Almeida Biolchini, J. C., Mian, P. G., Natali, A. C. C., Conte, T. U., & Travassos, G. H. (2007). Scientific research ontology to support systematic review in software engineering. Advanced Engineering Informatics, 21(2), 133–151. https://doi.org/10.1016/j.aei.2006.11.006

Denyer, D., & Tranfield, D. (2009). Producing a systematic review. In The Sage handbook of organizational research methods (pp. 671–689). Sage Publications Ltd.

Evidence for Policy and Practice Information and Co-ordinating Centre. (2022). EPPI-Centre. https://www.ucl.ac.uk/ioe/departments-and-centres/centres/evidence-policy-and-practice-information-and-co-ordinating-centre-eppi-centre

Higgins, J. P. T., Thomas, J., Chandler, J., Cumpston, M., Li, T., Page, M. J., & Welch, V. A. (2019). Cochrane handbook for systematic reviews of interventions. Cochrane Collaboration. https://training.cochrane.org/handbook/current

Kitchenham, B. A. (2004). Procedures for performing systematic reviews (Keele University Technical Report TR/SE-0401). https://www.inf.ufsc.br/~aldo.vw/kitchenham.pdf

Kitchenham, B., & Brereton, P. (2013). A systematic review of systematic review process research in software engineering. Information and Software Technology, 55(12), 2049–2075. https://doi.org/10.1016/j.infsof.2013.07.010

Moher, D., Liberati, A., Tetzlaff, J., Altman, D. G., & The PRISMA Group. (2009). Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. Journal of Clinical Epidemiology, 62(10), 1006–1012. https://doi.org/10.1016/j.jclinepi.2009.06.005

Petticrew, M., & Roberts, H. (2006). Systematic reviews in the social sciences: A practical guide. Blackwell Publishing.

Pullin, A. S., & Stewart, G. B. (2006). Guidelines for systematic review in conservation and environmental management. Conservation Biology, 20(6), 1647–1656. https://doi.org/10.1111/j.1523-1739.2006.00485.x

Tranfield, D., Denyer, D., & Smart, P. (2003). Towards a methodology for developing evidence‐informed management knowledge by means of systematic review. British Journal of Management, 14(3), 207–222. https://doi.org/10.1111/1467-8551.00375

This work is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License.

Issues in Science and Technology Librarianship No. 101, Fall 2022. DOI: 10.29173/istl2671